Introduction

Nine out of ten enterprises are adopting multicloud solutions, and for good reasons: greater negotiating power with multiple vendors, cost efficiency, access to best-of-breed services, and reduced risk of vendor dependency and data loss.

However, getting real value from multicloud requires high data quality. Multicloud acts like an amplifier: it strengthens environments that already have excellent data quality, but it also magnifies existing data issues. Flawed data takes many forms, including duplicate entries, inconsistent formats, missing values, and inaccuracies. In a multicloud environment, these flaws can propagate across multiple platforms, compounding the problems and leading to unreliable insights.

Data quality is crucial everywhere, from spreadsheets to complex platforms. Poor data quality can waste resources and increase costs, damage reputation and customer trust, and undermine the reliability of analytics.

In a multicloud setup, poor data from multiple sources can create significant challenges:

- Security: Inaccurate or incomplete data can lead to misconfigured security settings and missed vulnerabilities, exposing your infrastructure to cyber threats.

- Visibility: Inconsistent data can obscure a clear view of your operations, making it hard to monitor performance and spot issues.

- Integration: Data discrepancies can hinder smooth integration, leading to system incompatibilities and operational inefficiencies.

These data quality challenges can undermine your entire cloud infrastructure, leading to unreliable insights and poor decision-making. How can you get ahead of them? Read on for a simple three-step formula.

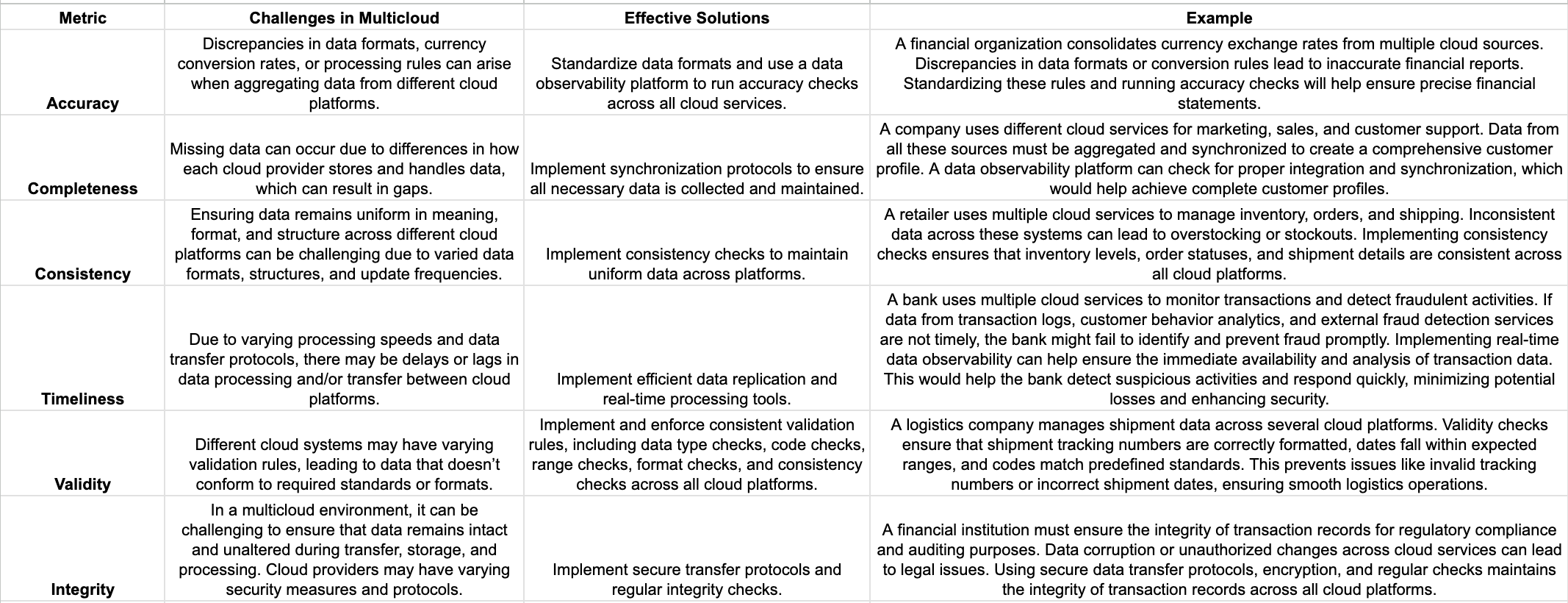

Step 1: Establish Standardized Data Quality Metrics

Below are some metrics and data quality issues that can plague multicloud environments.
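One way to make metrics "standardized" is to define each one as a single formula that every platform team applies in exactly the same way. The minimal Python sketch below assumes records arrive as dictionaries and scores three dimensions tied to the issues named in the introduction: missing values (completeness), duplicate entries (uniqueness), and inconsistent formats (validity). The function names, field names, and date pattern are hypothetical illustrations, not a prescribed metric set.

```python
import re


def completeness(records, field):
    """Share of records where `field` is present and non-empty (missing values)."""
    if not records:
        return 1.0  # design choice: an empty dataset trivially passes
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def uniqueness(records, key):
    """Share of records that are the first occurrence of their `key` value (duplicates)."""
    if not records:
        return 1.0
    return len({r.get(key) for r in records}) / len(records)


def validity(records, field, pattern):
    """Share of records whose `field` fully matches the agreed format (inconsistent formats)."""
    if not records:
        return 1.0
    regex = re.compile(pattern)
    valid = sum(
        1 for r in records
        if isinstance(r.get(field), str) and regex.fullmatch(r[field])
    )
    return valid / len(records)


# Hypothetical customer records pulled from one cloud platform.
records = [
    {"id": 1, "email": "a@example.com", "signup_date": "2024-01-05"},
    {"id": 2, "email": "", "signup_date": "05/01/2024"},
    {"id": 2, "email": "b@example.com", "signup_date": "2024-01-07"},
    {"id": 3, "email": "c@example.com", "signup_date": "2024-01-09"},
]

print(completeness(records, "email"))                          # 0.75
print(uniqueness(records, "id"))                               # 0.75
print(validity(records, "signup_date", r"\d{4}-\d{2}-\d{2}"))  # 0.75
```

Because every score falls between 0 and 1, results computed on different clouds can be compared directly and reused as targets in an SLA.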

Step 2: Create Data Quality SLAs with Your Cloud Providers

Once you have standardized your data quality metrics, you must establish and maintain acceptable levels for these metrics across all cloud platforms you use. This involves setting clear standards, implementing robust validation and synchronization processes, and conducting regular audits to monitor and uphold data quality.

The best way to accomplish these goals is to create a standard data quality service level agreement (SLA)—a contract between you and your cloud providers. The SLA must establish the level of quality that each metric defined in Step 1 must meet and the consequences if that data quality falls below acceptable levels.

All data quality SLAs should include the following:

- Metrics: The data quality metrics you defined in Step 1.

- Targets: The acceptable level of quality for each metric.

- Roles and responsibilities: The responsibilities of you and your cloud providers. For instance, who measures data quality? Who drives corrective actions?

- Consequences: What happens if data quality falls below the agreed targets? Establish fair and reasonable consequences that keep your relationship with the cloud provider productive.

- Reporting: How the metrics will be reported, including frequency and format.
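The SLA components listed above can also be captured in a machine-readable form, so that audits check data against the same targets the contract states. This is a minimal Python sketch; the class names, field names, provider name, and target values are hypothetical examples, not figures from any real SLA or provider.

```python
from dataclasses import dataclass, field


@dataclass
class MetricTarget:
    metric: str       # name of a metric defined in Step 1, e.g. "completeness"
    target: float     # minimum acceptable score, between 0 and 1
    owner: str        # party responsible for measuring and corrective action
    consequence: str  # agreed response if the target is missed


@dataclass
class DataQualitySLA:
    provider: str
    targets: list[MetricTarget] = field(default_factory=list)
    report_frequency: str = "weekly"  # reporting cadence agreed in the SLA

    def breaches(self, measured: dict[str, float]) -> list[MetricTarget]:
        """Return the targets whose measured score is below the agreed level.

        A metric missing from `measured` counts as a breach, because
        compliance cannot be shown without a measurement.
        """
        return [
            t for t in self.targets
            if t.metric not in measured or measured[t.metric] < t.target
        ]


# Hypothetical SLA with one provider; the numbers are illustrative only.
sla = DataQualitySLA(
    provider="provider-a",
    targets=[
        MetricTarget("completeness", 0.98, "provider", "root-cause report within 5 business days"),
        MetricTarget("uniqueness", 0.99, "customer", "rerun deduplication job"),
    ],
)

missed = sla.breaches({"completeness": 0.95, "uniqueness": 0.995})
print([t.metric for t in missed])  # ['completeness']
```

Treating an unreported metric as a breach is a deliberate choice here: it makes the Reporting clause enforceable rather than optional.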

Step 3: Conduct Data Quality Audits

The next step is to audit data quality to ensure your cloud providers adhere to the SLA. These audits involve systematically reviewing data to spot common multicloud issues, such as discrepancies in data formats, synchronization errors, and inconsistencies across platforms. Then assess data quality against the acceptable levels defined in the SLA, and identify and address any deviations.
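The cross-platform part of an audit can be sketched as a reconciliation between two copies of the same dataset. This is a minimal Python illustration, assuming each copy is a list of dictionaries sharing a unique `id` field; `reconcile` and the sample data are hypothetical. Keys present on only one side point to synchronization errors, and keys whose field values differ point to inconsistencies such as diverging formats.

```python
def reconcile(source, replica, key="id"):
    """Compare two copies of one dataset held on different platforms.

    Returns keys found on only one side (synchronization errors) and, for keys
    found on both, the names of fields whose values differ (inconsistencies).
    If a key repeats within one copy, the last record wins; duplicates are
    better measured separately with a uniqueness metric.
    """
    src = {r[key]: r for r in source}
    rep = {r[key]: r for r in replica}
    mismatched = {}
    for k in sorted(src.keys() & rep.keys()):
        fields = src[k].keys() | rep[k].keys()
        diffs = sorted(f for f in fields if src[k].get(f) != rep[k].get(f))
        if diffs:
            mismatched[k] = diffs
    return {
        "missing_in_replica": sorted(src.keys() - rep.keys()),
        "missing_in_source": sorted(rep.keys() - src.keys()),
        "mismatched": mismatched,
    }


# Hypothetical transaction records from two clouds.
cloud_a = [
    {"id": 1, "amount": "100.00", "currency": "USD"},
    {"id": 2, "amount": "50.00", "currency": "USD"},
    {"id": 3, "amount": "75.00", "currency": "EUR"},
]
cloud_b = [
    {"id": 1, "amount": "100.00", "currency": "USD"},
    {"id": 2, "amount": "50.0", "currency": "usd"},
    {"id": 4, "amount": "20.00", "currency": "USD"},
]
print(reconcile(cloud_a, cloud_b))
# {'missing_in_replica': [3], 'missing_in_source': [4], 'mismatched': {2: ['amount', 'currency']}}
```

Record 2 shows how a format discrepancy ("50.00" vs "50.0", "USD" vs "usd") surfaces as a mismatch; the counts of missing and mismatched records can then be compared with the SLA targets.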

Leveraging AI can expedite this process by automating data validation, anomaly detection, and trend analysis. For instance, in financial services, AI-driven audits can quickly identify discrepancies in transaction records across multiple clouds, helping to prevent fraud. Similarly, in healthcare, AI can help ensure the integrity and validity of patient data, supporting timely and accurate medical decisions.
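AI-driven tools are beyond the scope of a short example, but the core idea of anomaly detection can be shown with a plain statistical stand-in (not an AI model): flag any value that sits far outside its recent history. This Python sketch applies a trailing-window z-score test to a hypothetical series of daily row counts for one dataset on one cloud; the window size of 6 and the threshold of 3 standard deviations are illustrative assumptions, not recommendations.

```python
import statistics


def flag_anomalies(values, window=6, threshold=3.0):
    """Return indices of values more than `threshold` sample standard deviations
    away from the mean of the preceding `window` values."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mean = statistics.mean(baseline)
        stdev = statistics.stdev(baseline)
        if stdev == 0:
            # A perfectly flat baseline: any change at all is unusual.
            if values[i] != mean:
                flagged.append(i)
        elif abs(values[i] - mean) / stdev > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily row counts for one table replicated to one cloud.
daily_rows = [1000, 1010, 990, 1005, 995, 1002, 400, 1001]
print(flag_anomalies(daily_rows))  # [6]
```

A sudden drop like the 400-row day is the kind of signal that can indicate a failed or partial sync. One limitation of this simple design is that a flagged value stays in the trailing window and inflates the baseline for the next few points; a more careful version would exclude flagged values from later baselines.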

Key Takeaways

Maintaining data quality in a multicloud environment is challenging due to issues such as inaccuracies, inconsistencies, and data integrity problems.

A three-step framework can tackle these issues: 1) establish standardized data quality metrics, 2) set acceptable quality levels through SLAs with your cloud providers, and 3) conduct regular audits using AI for greater efficiency.

A New Framework for Data Quality: Acceldata's "Shift-left" Approach

Acceldata's shift-left approach enhances data reliability in multicloud environments by integrating observability practices early in the data lifecycle, helping ensure that incoming data is both high-quality and trustworthy. Its industry-leading data observability platform makes adopting this approach straightforward.
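The sketch below does not show Acceldata's platform or API. As a generic illustration of the shift-left idea, it validates each record at ingestion and quarantines failures before they are loaded and replicated to other platforms. The field names (`customer_id`, `order_date`, `amount`), the rules, and the `validate`/`ingest` helpers are hypothetical.

```python
from datetime import date


def validate(record):
    """Return the rule violations for one incoming record (an empty list if clean)."""
    errors = []
    if not record.get("customer_id"):
        errors.append("missing customer_id")
    try:
        date.fromisoformat(record.get("order_date", ""))
    except (TypeError, ValueError):
        errors.append("order_date is not an ISO 8601 date")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    return errors


def ingest(batch):
    """Split a batch into records that are safe to load and records to quarantine."""
    accepted, quarantined = [], []
    for record in batch:
        errors = validate(record)
        if errors:
            quarantined.append((record, errors))
        else:
            accepted.append(record)
    return accepted, quarantined


# Hypothetical incoming batch: one clean record, one with three problems.
batch = [
    {"customer_id": "C-1001", "order_date": "2024-01-05", "amount": 19.99},
    {"customer_id": "", "order_date": "05/01/2024", "amount": -5},
]
accepted, quarantined = ingest(batch)
print(len(accepted), len(quarantined))  # 1 1
for record, errors in quarantined:
    print(errors)
```

Catching the bad record at the entry point means only one platform ever sees it, instead of every cloud it would otherwise be synchronized to.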

Acceldata is a category leader in the Everest Group Data Observability Technology Provider PEAK Matrix® Assessment 2024. The platform leverages cutting-edge AI to prioritize alerts, improve data integrity, and provide actionable insights. Its unparalleled predictive analytics provide top-tier protection against fraud. Acceldata is built to scale, supporting vast numbers of transactions without compromising performance.

Schedule a demo of the Acceldata platform and explore how real-time observability can transform your multicloud organization's data quality.

Summary

Multicloud environments can worsen data quality issues. To fix this, businesses should:

- Define clear data quality standards.

- Agree on data quality expectations with cloud providers.

- Regularly check data for problems.

Using AI tools can help find and fix data issues faster.
