Optimizing the costs of enterprise-scale data infrastructure and operations is a major challenge that has long confounded data engineering teams. With data observability, however, data teams at organizations of all sizes are optimizing their data operations at scale and achieving impressive ROI on their data investments.

One survey found that 82% of organizations running cloud infrastructure workloads report having incurred unnecessary costs. Gartner also predicts that 60% of infrastructure and operations leaders will encounter public cloud cost overruns.

Why do these unnecessary and unexpected data-related costs creep in and catch enterprises off guard? Because enterprise data operations are complex, and any mistake can quickly escalate into millions of dollars in avoidable expenses. Data observability can help you reduce downtime, software, and hardware costs. For roughly the annual cost of one full-time employee, data observability can deliver over 80X ROI for your enterprise.

Let’s look at how Acceldata’s suite of data observability solutions helped companies like PhonePe, True Digital, and PubMatic save several million dollars a year as they scaled their data infrastructure.

Data Observability Can Reduce Data Downtime Costs

Your data pipelines become more fragile as your data operations scale. Without data observability, data teams can’t predict and prevent pipeline problems. As a result, enterprises face unexpected periods of data downtime, which contribute to customer churn and lost revenue opportunities. Data observability instruments your entire data infrastructure so you can predict and prevent data downtime.

Data observability offers a unified view of all your data systems across the entire data lifecycle. This can help you:

- Correlate errors with events across different data pipelines

- Identify data-related problems and investigate their root causes

- Improve data quality, optimize data pipelines, and reduce infrastructure costs as you scale

- Go beyond aggregate data to get better visibility into the health and reliability of data systems

More importantly, the advanced AI/ML capabilities of Acceldata’s suite of data observability solutions can help you predict and prevent data outages. Preventing data downtime matters because downtime costs enterprises in two ways: (a) data teams working around the clock to fix it and (b) lost revenue due to customer churn.

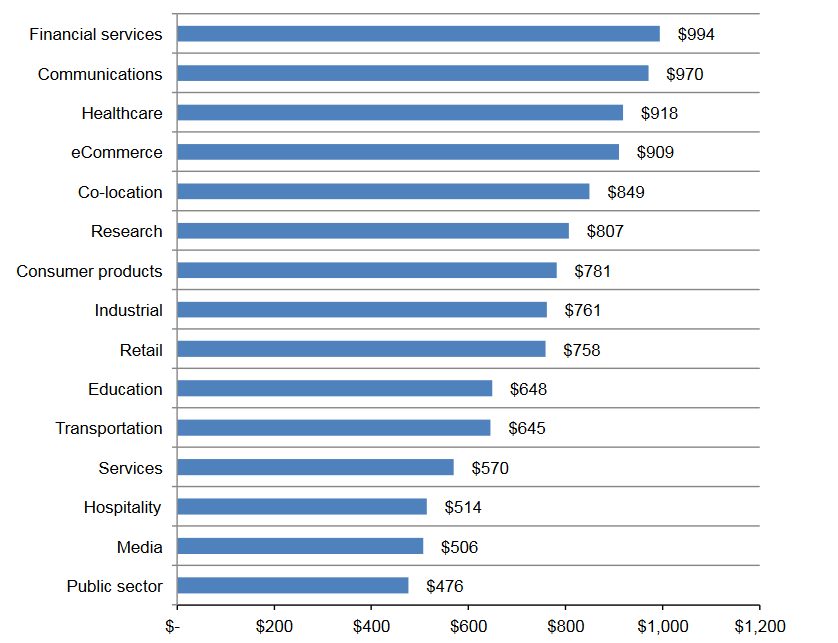

The Ponemon Institute estimates that, on average, a single data center outage costs an enterprise nearly $750,000. In the financial services, communications, and healthcare industries, an outage can cost nearly a million dollars.

Source: Ponemon Institute, Cost of Data Center Outages, Bar Chart 4

Even one such outage can cost your enterprise dearly, and it’s not uncommon for enterprises to face one or two outages a year as they scale.
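To make that exposure concrete, the short Python sketch below multiplies the Ponemon average by the one or two outages a year mentioned above. It treats $750,000 as a flat per-outage cost, which is a simplifying assumption (actual costs vary by industry and by incident), and the function name is illustrative only.

```python
# Back-of-the-envelope annual outage exposure.
# Assumption: a flat ~$750,000 per outage, taken from the Ponemon average
# quoted above; real per-incident costs vary widely.
AVG_COST_PER_OUTAGE = 750_000  # USD


def annual_outage_cost(outages_per_year: int,
                       cost_per_outage: int = AVG_COST_PER_OUTAGE) -> int:
    """Return the expected yearly outage cost in USD."""
    return outages_per_year * cost_per_outage


for n in (1, 2):
    print(f"{n} outage(s)/year -> ${annual_outage_cost(n):,}")
```

Under these assumptions, one or two outages a year translate into roughly $750,000 to $1.5 million in annual exposure.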

True Digital Went From Frequent Data Downtime Outages to Zero Outages

True Digital, a multinational digital technology company, faced recurring downtime problems. As it scaled, its data infrastructure and operations struggled to keep pace with growing user demand. As a result, True Digital faced extensive data system performance issues that regularly left 50% of ingested data unprocessed. With limited visibility into its data pipelines, the company was also constantly fighting system slowdowns and unplanned outages.

After adopting Acceldata Pulse, the True Digital team reduced the time required to produce critical daily business reports. More importantly, the company has not experienced a single unplanned outage in over seven months.

PubMatic Used Pulse to Resolve Its Systemic Data Infrastructure Problems

A similar story played out at PubMatic, a fast-growing, publicly traded American ad-tech firm. Its data team was plagued by frequent outages, performance bottlenecks, and high MTTR (Mean Time to Resolution). This undermined PubMatic’s core value proposition: providing reliable analytics to its end users.

To resolve its systemic data infrastructure problems, PubMatic began using Acceldata Pulse in mid-2020. Pulse immediately improved visibility into PubMatic’s data applications and complex, interconnected data systems. This helped the team isolate bottlenecks and automate performance improvements. As a result, the company was able to offer its users reliable customer-facing analytics without any unplanned outages. Read the full PubMatic case study here.

PhonePe Maintained 99.97% Availability as It Scaled Data Infrastructure by 20X

Outages hurt technology companies like True Digital and ad-tech companies like PubMatic, but they can hit fin-tech companies such as PhonePe even harder: over 250 million registered users depend on the platform to process instant payments.

As PhonePe rapidly acquired new users, it scaled its data infrastructure 20X in a single year. However, its data team already had its hands full firefighting unexpected data issues and infrastructure failures. PhonePe’s Chief Reliability Officer, Burzin Engineer, realized that his team needed tools to improve visibility into the company’s data operations. Without data observability, they risked stalling PhonePe’s growth.

PhonePe implemented Acceldata Pulse in less than a day and immediately began identifying problems with HBase region servers and tables that were under pressure. Pulse gave its data team deeper insights than the aggregated information other tools provided. With automated alerts and easy-to-read dashboards, the PhonePe team could identify root causes more quickly than ever before.

Pulse helped eliminate day-to-day engineering involvement, outage firefighting, and performance degradation. This meant the data team could spend more time scaling its data infrastructure and adding new capabilities. As a result, the company maintained 99.97% availability while scaling its data infrastructure 20X.
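To put 99.97% availability in perspective, an availability target can be converted into a yearly downtime budget. The Python sketch below assumes a 365-day year and wall-clock availability; how PhonePe actually measures availability isn’t stated, and the function name is illustrative.

```python
# Convert an availability percentage into a yearly downtime budget.
# Assumptions: a 365-day year (leap years ignored) and availability
# measured over wall-clock time.
HOURS_PER_YEAR = 365 * 24  # 8,760 hours


def downtime_budget_hours(availability_pct: float) -> float:
    """Maximum downtime per year, in hours, allowed by an availability target."""
    return (1 - availability_pct / 100) * HOURS_PER_YEAR


hours = downtime_budget_hours(99.97)
print(f"99.97% availability allows about {hours:.2f} hours of downtime per year")
```

At 99.97%, that works out to roughly 2.6 hours, or about 2 hours 38 minutes, of downtime across an entire year.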

“Acceldata supports our hyper-growth and helps us manage one of the world’s largest instant payment systems,” says Burzin Engineer, the Founder & Chief Reliability Officer at PhonePe. He adds, “PhonePe’s biggest-ever data infrastructure initiative would never have been possible without Acceldata.” Read the full PhonePe case study here.

Data Observability Can Reduce Your Software Solution Costs

Proprietary offerings such as Oracle Database, Azure SQL, and Amazon Relational Database Service (RDS) provide managed data infrastructure. For most organizations, such managed solutions offer agility and scalability and save hours of manual upkeep and maintenance, which can add up to significant cost savings.

However, this may not hold for large enterprises that already serve hundreds of thousands of users and continue to scale. At that size, fully managed software solutions can end up costing more, not less. In industries such as financial services, healthcare, and communications, enterprises can pay several million dollars a year in data infrastructure costs. Data observability can help such enterprises switch to open-source technologies and reduce their software and solution costs.

The cost of proprietary managed solutions at this scale is significant enough that VC firms like Andreessen Horowitz have made a case for cloud repatriation. In an opinion piece, Sarah Wang and Martin Casado argue that enterprises may be able to save as much as 30% to 50% of their total cloud costs. Whether or not you agree, the potential savings are too large to ignore.

Another problem with proprietary managed software solutions is cost opacity. Cloud infrastructure bills depend on several usage factors that are hard to understand or predict, so enterprises may receive monthly or quarterly bills that fluctuate widely. More often than not, those bills include some unexpected costs.

“Cloud bills can be 50 pages long,” says Randy Randhawa, Senior Vice President at Virtana, in a SiliconANGLE article. “[So] figuring out where [to] optimize is difficult.”

With Acceldata’s suite of data observability solutions, enterprises can avoid paying for software licenses and solutions they don’t need. “Acceldata’s data observability saved us millions of dollars for software licenses that we no longer need,” says Ashwin Prakash, the Engineering Leader at PubMatic. “Now we can focus on scaling to meet the needs of [our] rapidly growing business,” he adds.

For PhonePe, the savings on software and solution costs were even larger: the PhonePe team estimates that Acceldata helped it save at least $5 million a year in software licensing costs.

Such savings aren’t limited to ad-tech and fin-tech companies like PubMatic and PhonePe. Data observability can help any enterprise with large data infrastructure needs optimize its costs.

For instance, Acceldata helped True Digital, a multinational technology company, cut its software costs by over $2 million a year, mainly by identifying overprovisioned and unnecessary software licenses.

“[Acceldata] helped True Digital transition to open-source technologies. [They allowed] us to reduce licensing costs, while delivering mission-critical analytics across the enterprise,” says Wanlapa Linlawan, Acting Head of Analytics & AI Product Technology at True Digital.

Data Observability Can Reduce Infrastructure Costs as You Scale

The flip side of adopting free, open-source technologies such as Hadoop and Spark is that teams must now manage the data infrastructure behind them. Overprovisioned, mismanaged, and poorly allocated compute resources can come back to bite enterprises in the form of avoidable infrastructure costs, which often run to hundreds of thousands of dollars a year. Data observability can help optimize infrastructure costs and eliminate unnecessary resources.

PubMatic’s data infrastructure, for example, includes 3,000+ nodes, 150+ petabytes of data, and 65+ Hortonworks data clusters. PubMatic also uses YARN, Kafka (50+ small Kafka clusters with 10–15+ nodes per cluster), Spark, HBase, and the Hortonworks Data Platform, and it is constantly expanding this infrastructure.

In this massive environment, Acceldata helped PubMatic optimize its HDFS cluster and reduce its block footprint by 30%. Acceldata also helped PubMatic consolidate its Kafka clusters to save infrastructure costs and lower its OEM support costs.

Together, these changes helped PubMatic improve its most important business metric: cost per ad impression. “[Acceldata] helped us optimize HDFS performance, consolidate Kafka clusters, and reduce cost per ad impression, which is one of our most critical performance metrics,” says Ashwin.

True Digital’s data systems were built to handle more than 500 million user impressions per month while streaming approximately 69,000 messages per second. To support this level of user activity, its data team manages 100+ nodes, over 8 petabytes of data, and a technical environment based on Hadoop, Hive, Spark, Ranger, Kafka, and the Hortonworks Data Platform.

In this environment, Acceldata helped True Digital optimize its HDFS storage by 25%. It also helped True Digital’s data team identify and eliminate unnecessary data, so its existing 8-petabyte data lake was sufficient to meet all of its critical enterprise analytics requirements.

As a result, True Digital was able to increase its system capacity without scaling its data infrastructure. More importantly, as the company grew, it saved over a million dollars in budgeted capital expenditure.

Acceldata also helped PhonePe reduce its annual data warehouse costs by 65% as the company scaled from 70 Hadoop nodes to 1,500.

While each organization has different data infrastructure environments and needs, the benefits hold across industries: data observability can help any large enterprise eliminate avoidable data infrastructure costs.

Data Observability Unlocks the Value of Your Data

Without data observability, enterprise data teams must manually identify, debug, and weed out systemic data problems. They also stitch broken data pipelines back together with clever pieces of code. But is this the best use of a data team’s time?

Data observability eliminates this sort of manual drudgery and unlocks more time for data teams. It automatically monitors data, tracks lineage, performs preventive maintenance, and predicts systemic problems so that your data team isn’t manually debugging unexpected errors or spending hours fixing data pipelines.
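As a minimal illustration of one kind of automated check an observability tool might run, a volume monitor can compare today’s row count for a table against recent history and flag large deviations, such as half of the ingested data going missing. This is a generic z-score sketch, not Acceldata’s algorithm; the function name, the 3-standard-deviation threshold, and the sample counts are all hypothetical.

```python
from statistics import mean, stdev


def is_volume_anomaly(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Hypothetical volume check: flag today's row count if it lies more than
    `threshold` standard deviations from the mean of recent daily counts."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu  # any change from a perfectly flat history is unusual
    return abs(today - mu) / sigma > threshold


# Hypothetical daily row counts for one table over the past week.
daily_rows = [1_020_000, 998_000, 1_005_000, 1_012_000, 990_000, 1_001_000, 1_008_000]
print(is_volume_anomaly(daily_rows, 1_003_000))  # typical day
print(is_volume_anomaly(daily_rows, 480_000))    # roughly half the data missing
```

A fixed z-score threshold keeps the sketch simple; production tools typically also account for seasonality (weekday versus weekend loads, for example) so that expected swings aren’t flagged as incidents.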

As a result, your data team can now concentrate on helping the business scale and develop new opportunities that add direct value to your bottom line.

Book a demo of the Acceldata Data Observability Platform to see how your enterprise can save millions of dollars every year and unlock the true potential of your data.

Photo by Scott Graham on Unsplash
