There’s no question that bad data hurts the bottom line.

Bad customer data costs companies six percent of their total sales, according to a UK Royal Mail survey. The UK Government’s Data Quality Hub estimates that organizations spend between 10% and 30% of their revenue tackling data quality issues. For multibillion-dollar companies, that can easily amount to hundreds of millions of dollars per year. Another estimate, from IBM, pegs the overall cost of poor data quality for U.S. businesses at $3.1 trillion per year.

The cost of poor-quality, unreliable data is set to skyrocket as companies transform into data-driven digital enterprises. Data will underpin even more of their business models and internal processes, and it will drive key business decisions. Access to data is therefore critical, but ensuring that the data is reliable becomes absolutely mission-critical.

Conventional wisdom says that data quality monitoring tools are the solution, and the market is flush with them. However, with the shift toward distributed, cloud-centric data infrastructures, these tools have rapidly become outdated. They were designed for an earlier generation of application environments. They are unable to scale, too labor-intensive to manage, too slow at diagnosing and fixing the root causes of data quality problems, and hopeless at preventing future ones.

It’s important to understand the technical reasons why data quality monitoring tools and their passive, alert-based approach have aged so poorly. I will also argue that, rather than choosing a legacy technology, forward-looking organizations should look at a multidimensional data observability platform purpose-built for modern architectures, one that rapidly fixes and prevents data quality problems and automatically maintains high data reliability.

Data Quality: More than Just Data Errors

Let’s start with a refresher on what we mean by data quality. One of the biggest misconceptions is that data quality only means clean, error-free data. As my CEO Rohit Choudhary has explained, there are actually six critical facets to data quality:

- Accuracy

- Completeness

- Consistency

- Freshness

- Validity

- Uniqueness

In other words, data can be error-free (accurate) yet have missing elements (completeness) that prevent you from completing analytical jobs. Or your data may be stored using different units or labels, creating consistency problems that wreak havoc on calculations. Stale, out-of-date data (freshness) can do the same. Or the schemas and formats of your data can differ wildly from dataset to dataset; data that does not conform to the expected structure (validity) can be nearly impossible to aggregate and query together. Finally, uniqueness measures whether each record or entity appears only once, which makes it the key dimension for ruling out duplication. It is measured by analyzing and comparing records against the other records in your environment. A dataset with a high uniqueness score has no duplicates, or only a minimal number of them, and that builds trust in your data.
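To make the measurable facets more concrete, here is a minimal Python sketch that scores a small batch of records for completeness, uniqueness, validity, and freshness. The record layout, the field names, the email pattern, and the freshness window are all hypothetical choices for illustration. Accuracy and consistency are left out because they need a trusted reference source (or a unit and label convention) to compare against.

```python
import re
from datetime import datetime, timedelta, timezone

# Hypothetical sample records; field names are illustrative, not from any real system.
records = [
    {"id": 1, "email": "ana@example.com", "updated": datetime(2024, 5, 1, tzinfo=timezone.utc)},
    {"id": 2, "email": None, "updated": datetime(2024, 5, 2, tzinfo=timezone.utc)},
    {"id": 2, "email": "bo@example", "updated": datetime(2024, 5, 2, tzinfo=timezone.utc)},
    {"id": 3, "email": "cy@example.com", "updated": datetime(2024, 5, 3, tzinfo=timezone.utc)},
]

# Assumed validity rule: a simple "something@domain.tld" shape.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows, field):
    """Share of rows in which `field` is present and not None."""
    return sum(r.get(field) is not None for r in rows) / len(rows)


def uniqueness(rows, key):
    """Distinct values of `key` divided by row count (1.0 means no duplicates)."""
    return len({r[key] for r in rows}) / len(rows)


def validity(rows, field, pattern=EMAIL_RE):
    """Share of non-null values in `field` that match the expected format."""
    values = [r[field] for r in rows if r.get(field) is not None]
    if not values:
        return 1.0
    return sum(bool(pattern.match(v)) for v in values) / len(values)


def is_fresh(rows, ts_field, max_age, now):
    """True if the newest timestamp in `ts_field` is no older than `max_age`."""
    newest = max(r[ts_field] for r in rows)
    return now - newest <= max_age


if __name__ == "__main__":
    now = datetime(2024, 5, 4, tzinfo=timezone.utc)
    print(completeness(records, "email"))                        # 0.75
    print(uniqueness(records, "id"))                             # 0.75
    print(round(validity(records, "email"), 2))                  # 0.67
    print(is_fresh(records, "updated", timedelta(days=2), now))  # True
```

On this sample, the missing email and the duplicated `id` each pull their scores down to 0.75, and the malformed `bo@example` address drops validity to about 0.67, even though no single value is "wrong" in the narrow accuracy sense.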

Here’s a real-world example of poor data quality wreaking havoc even though the data was technically error-free. In 1999, NASA lost the Mars Climate Orbiter, a spacecraft sent to Mars, to a data consistency issue. NASA used metric units, while its contractor, Lockheed Martin, used English (imperial) units. As a result, Lockheed’s ground software reported the Orbiter’s thruster impulse in pound-force seconds, while NASA’s navigation software interpreted the same numbers as newton-seconds. The probe dipped roughly 100 miles closer to the planet than expected and either crashed on Mars or flew off toward the sun (NASA doesn’t know, as communication with the probe had already been lost). The error brought NASA’s $327.6 million mission to a premature end.

Too many data quality monitoring tools focus on keeping data error-free while neglecting the five other crucial facets of data quality. Tackling all six is essential to ensure that your data not only generates correct quantitative results, but is also the right fit for the use case and ready for analysis.

Data Quality Monitoring: Defensive and Dumb

Data quality monitoring tools have been around for decades, with some emerging in the 1980s and 1990s alongside early generations of relational databases and data warehouses, often under the umbrella of Master Data Management (MDM) or data governance. The approach most of them take has not changed much over the years: data is discovered and profiled when it is first ingested into a database or data warehouse.

Then a monitoring tool is set to watch the repositories and send alerts to an IT administrator or data engineer if it detects an issue. Hopefully, the data quality (DQ) monitoring tool catches problems before a BI analyst, a data scientist, or, worst of all, an executive calls to complain about bad data. Either way, the administrator or engineer then springs into action and starts investigating the root cause of the data quality problem.
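The passive, alert-based loop described above can be sketched in a few lines of Python. This is a minimal illustration, not any particular vendor’s product: the `Rule` class, the `crm.customers` table, the metric names, and the thresholds are all hypothetical. Each rule is a static, hand-set threshold, and the resulting alert says only which check failed, not why, so the root-cause investigation is still left to a human.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    """A hypothetical static data quality rule."""
    name: str
    check: Callable[[Dict[str, float]], bool]  # returns True when the metric looks healthy


def run_checks(table: str, metrics: Dict[str, float], rules: List[Rule]) -> List[str]:
    """Evaluate each rule against profiled metrics and collect alert messages."""
    alerts = []
    for rule in rules:
        if not rule.check(metrics):
            alerts.append(f"[ALERT] {table}: rule '{rule.name}' failed")
    return alerts


# Thresholds are fixed by hand and never adapt to how the data actually behaves.
RULES = [
    Rule("row_count_min", lambda m: m["row_count"] >= 1000),
    Rule("null_email_pct_max", lambda m: m["null_email_pct"] <= 5.0),
]

if __name__ == "__main__":
    profiled = {"row_count": 1200, "null_email_pct": 9.5}
    for alert in run_checks("crm.customers", profiled, RULES):
        print(alert)  # [ALERT] crm.customers: rule 'null_email_pct_max' failed
```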

This reactive approach is all about playing defense. It worked fine when data volumes were relatively low, incidents were few, data-driven processes were batch-oriented and not mission-critical, and problems were simple enough for a data engineer to solve.

Unfortunately, that’s not where most organizations are today. Today’s enterprises are swimming in data. “Some organizations collect more data in a single week than they used to collect in an entire year,” noted our CEO Rohit Choudhary in a recent interview with Datatechvibe.

In the absence of tools that actively ensure data reliability, incidents of bad and unreliable data have increased along with the supply of data. Data also powers more real-time and mission-critical business processes. These range from driving sales revenue (think of web clickstreams informing e-commerce personalization) to automated shipping and logistics systems driven by IoT sensors, with many more examples in gaming, fleet management, and social media.

Today’s data downtime incidents are also more complicated and usually have multiple, long-brewing causes. In those cases, the Mean Time To Resolution (MTTR) for fixing such complex data quality problems balloons. That is happening just as companies are getting serious about enforcing data Service Level Agreements (SLAs) with both outside vendors and internal tech and data teams.

Why Bad Data is an Epidemic

Besides the sheer increase in data, there are other reasons why data quality problems are growing in both number and scope. As my CEO Choudhary detailed in another blog post, there are five main reasons:

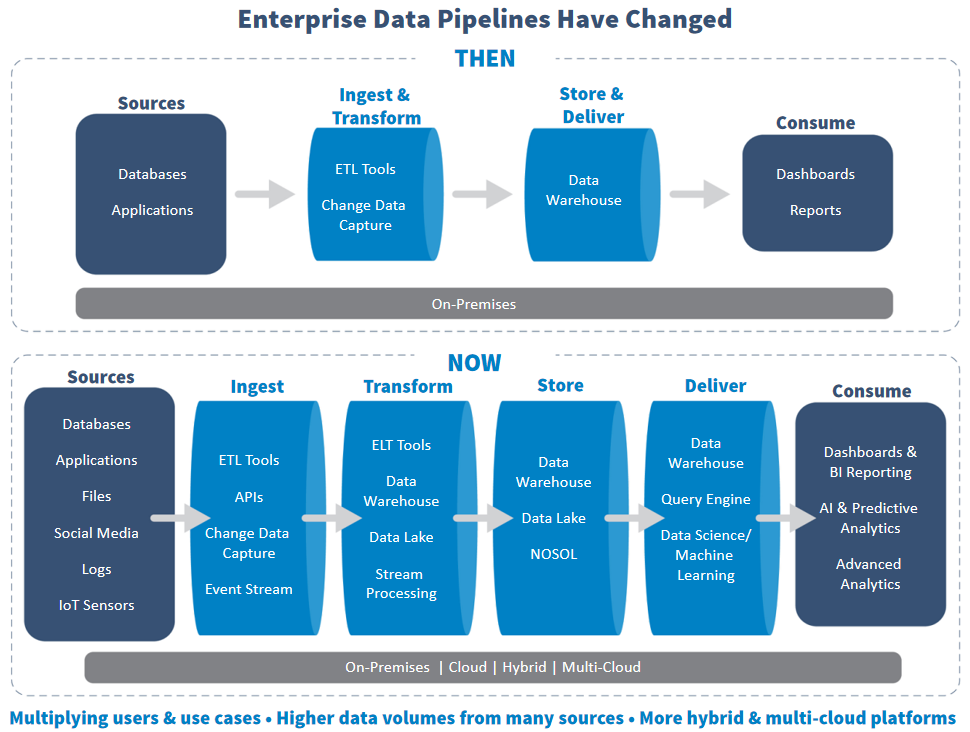

- Data pipeline networks are more sprawling and more complicated than ever, due to the rapid uptake in easy-to-use cloud data stores and tools by businesses over the past decade. The higher velocity of data creates more chances for data quality to degrade. Every time data travels through a data pipeline, it can be aggregated, transformed, reorganized, and corrupted.

Source: The Definitive Guide to Data Observability for Analytics and AI, Eckerson Group

- Data pipelines are more fragile than ever, due to their complexity, business criticality, and the real-time operations they support. For instance, a simple metadata change to a data source, such as adding or removing fields or columns or changing data types, can create schema drift that invisibly disrupts downstream analytics.

- Data lineages are longer, too, while their documentation (the metadata that tracks where the data originated and how it has subsequently been used, transformed, and combined) has not kept pace. That makes it harder for users to trust data, and harder for data engineers to hunt down data quality problems when they inevitably emerge.

- Traditional data quality testing does not suffice. Profiling data in depth when it is first ingested into a data warehouse is no longer enough, because many more data pipelines now feed many more data repositories. Without continuous data discovery and data quality profiling, those repositories become data silos and pools of dark data, hidden across various clouds, with their data quality problems festering.

- Data democracy worsens data quality and reliability. As much as I applaud the rise of low-ops cloud data tools and the resulting emergence of citizen data scientists and self-service BI analysts, I also believe they have inadvertently made data quality problems worse, since these users by and large lack the training and historical knowledge to handle data consistently well.
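Schema drift, mentioned in the second point above, is one of the easier problems to catch mechanically: compare a table’s current schema against a stored baseline. The minimal Python sketch below assumes each schema is already available as a simple mapping from column name to type string; how you obtain it (for example, from a data catalog or the database’s own metadata views) depends on your platform. The `diff_schema` helper and the `orders` table and column names are hypothetical.

```python
from typing import Dict, List, Tuple


def diff_schema(baseline: Dict[str, str], current: Dict[str, str]) -> Dict[str, list]:
    """Report columns that were added, removed, or changed type since the baseline."""
    added: List[str] = sorted(set(current) - set(baseline))
    removed: List[str] = sorted(set(baseline) - set(current))
    retyped: List[Tuple[str, str, str]] = sorted(
        (col, baseline[col], current[col])
        for col in set(baseline) & set(current)
        if baseline[col] != current[col]
    )
    return {"added": added, "removed": removed, "retyped": retyped}


if __name__ == "__main__":
    # Hypothetical "orders" table: yesterday's schema vs. today's.
    baseline = {"order_id": "BIGINT", "amount": "DECIMAL(10,2)", "region": "VARCHAR"}
    current = {"order_id": "BIGINT", "amount": "VARCHAR", "region_code": "VARCHAR", "channel": "VARCHAR"}
    drift = diff_schema(baseline, current)
    print(drift["added"])    # ['channel', 'region_code']
    print(drift["removed"])  # ['region']
    print(drift["retyped"])  # [('amount', 'DECIMAL(10,2)', 'VARCHAR')]
```

A change like `amount` quietly becoming a string is the kind of drift that can break downstream sums and aggregations without any obvious failure at ingestion time, which is why running a comparison like this continuously, rather than once at load time, matters.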

The Modern Solution for Data Quality: Data Observability

Data quality monitoring tools, with their passive, manual approach that has barely changed in decades, can’t cope with today’s environment, where data is highly distributed, fast-moving, and even faster-changing. They leave data engineers and other data ops team members consumed by daily firefighting, suffering from alert fatigue, and unable to meet their SLAs. Both data quality and data performance suffer, while data costs spiral out of control.

Application Performance Monitoring (APM) tools are not up to the challenge either. While APM vendors do promise a form of business observability, they are focused, as their name suggests, on application performance. Data is a mere sideline for APM tools, and when they do look at data, it is only at data performance, not data quality.

In the real-time data era we are entering, alerts come too late, and slow is the new down. This era’s solution is data observability, which takes a proactive approach to data quality that goes far beyond simple monitoring and alerts, reducing the complexity and cost of ensuring data reliability.

A multidimensional data observability platform provides the same table-stakes monitoring that data quality monitoring tools do, but it monitors data quality from every angle rather than giving short shrift to any key facet. Moreover, data observability assumes data is in motion, not static. It continuously discovers and profiles your data wherever it resides and whichever data pipeline it travels through, preventing data silos and detecting early signals of degrading data quality. Finally, data observability platforms use machine learning to combine and analyze all of this historical and current metadata about your data quality.

This gives data observability platforms four superpowers. They can:

- Automate tasks such as data cleansing and reconciling data in motion, stopping minor data quality problems before they start

- Slash the number of false-positive and other unnecessary alerts your data engineers receive, reducing alert fatigue and the amount of manual data quality engineering work needed

- Predict major potential data quality problems in advance, enabling data engineers to take preventative action

- Offer actionable advice to help data engineers solve data quality problems, reducing MTTR and data downtime
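As a rough illustration of how historical profiling metadata can support early warnings while cutting unnecessary alerts, the Python sketch below flags a table’s daily row count only when it deviates strongly from that table’s own recent history, rather than from a hand-set threshold. It uses a simple z-score from the standard library `statistics` module as a stand-in for the machine learning models described above; it is not Acceldata’s method, and the `is_anomalous` helper, the sample history, and the threshold of 3 standard deviations are assumptions.

```python
import statistics
from typing import Sequence


def is_anomalous(history: Sequence[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `z_threshold` standard deviations
    from the mean of the recent `history` of the same metric."""
    if len(history) < 2:
        return False  # too little history to estimate normal variation
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return latest != mean  # perfectly stable metric: any change is unusual
    return abs(latest - mean) / stdev > z_threshold


if __name__ == "__main__":
    # Hypothetical daily row counts for one table over the past week.
    daily_rows = [10_120, 9_980, 10_050, 10_200, 9_900, 10_075, 10_010]
    print(is_anomalous(daily_rows, 10_100))  # False: within normal variation
    print(is_anomalous(daily_rows, 6_400))   # True: a likely partial load
```

Because the baseline comes from each table’s own behavior, a noisy table gets a wider tolerance and a stable one a tighter tolerance, which is one way adaptive checks can reduce false positives compared with fixed thresholds. A real deployment would likely also need to account for seasonality, such as weekday versus weekend volumes.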

At a larger business level, data observability can help data ops teams meet SLAs that keep revenue-generating data-driven businesses up and running. It can also dramatically reduce cloud fees by enabling businesses to archive unused data and consolidate and eliminate redundant data and processes. And it allows data engineers to be redeployed away from tedious, unprofitable tasks such as manually cleansing datasets and troubleshooting problems caused by unreliable data, to more strategic jobs that boost the business.

Acceldata’s multidimensional Data Observability platform leverages AI to automate data reliability for organizations and reduce all forms of data quality-related downtime. Request a free demo to see how Acceldata can free your business from the shackles of alert-based data monitoring and deliver the ML-infused productivity, reliability, and cost savings of true multidimensional data observability.
