There’s no shortage of enterprises still running mission-critical analytics the old-school way: via Hadoop clusters and other on-premises databases and data warehouses.

With the imminent end of Cloudera support for Hortonworks Data Platform (HDP) and Cloudera Data Hub (CDH), many Hadoop users are scrambling for a way to maintain their infrastructure so they can avoid a rushed, risk-filled migration.

In “Got Hortonworks or Cloudera? How to Avoid A Disastrous, Costly Forced Migration,” we explored how the Acceldata platform and our Hadoop experts can provide a safer, more efficient and less expensive way to continue running CDH, HDP or the open-source version of Hadoop for years to come.

At the same time, our technology and team also work behind the scenes to prepare your infrastructure for an eventual migration to a modern cloud-native database of your choice.

In this blog, I’ll share all of the ways Acceldata can help you make a painless and cost-effective migration to the popular cloud data warehouse, Snowflake. I’ll also detail all of the features of our data observability platform that enable you to optimize the cost performance of your Snowflake-based analytics stack once it’s operational.

Planning and Executing a Six-Step Migration

For a successful cloud data migration, dividing this massive project into phases is key. In our view, a six-stage process for migrating from a Hadoop data lake or other on-premises infrastructure to Snowflake is ideal, and would include:

- Proof of Concept

- Data Preparation

- Data Migration

- Data Consumption

- Data Monitoring

- Optimization

Proof of Concept. During the Proof of Concept, the Acceldata Data Observability Cloud — part of our compute performance monitoring module, Acceldata Pulse — provides recommendations for Snowflake best practices and tells you how well you are following them.

After implementing the PoC, Acceldata also provides dashboards and hero reports that help you champion Snowflake by showing its effectiveness.

These include cost intelligence dashboards that help you make smart project budgeting/contract decisions and avoid the TMGT (Too-Much-of-a-Good-Thing) effect: out-of-control op-ex costs that are an all-too-common result of switching to a cloud platform.

I’ll cover how Acceldata can help you optimize your cost-performance later in this post.

Data Preparation. With any mature data infrastructure, pools of dark data and underutilized data stored in overly-expensive ‘hot’ storage tend to abound. That’s especially true for Hadoop data lakes that are used as catch-all repositories for all the company’s data.

Acceldata provides an inventory of all your data assets inside Hadoop or other data technologies, along with information about how they are used, their data quality, and other profile details. This helps organizations decide what data to consolidate, retain, and prioritize during migration. You can also capture a performance baseline of your existing Hadoop data workloads, so that you can set expectations before they are migrated to Snowflake and measure improvements afterward.
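To make the triage idea concrete, here is a minimal Python sketch of how an inventory with usage information could be sorted into migration waves. It is an illustration only, not Acceldata's implementation: the `DataAsset` fields, the `triage` function, and the thresholds (365 idle days, 10 reads in 90 days) are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DataAsset:
    # Hypothetical inventory record; field names are assumptions.
    name: str
    size_gb: float
    last_accessed: date
    reads_last_90d: int


def triage(asset: DataAsset, today: date,
           cold_after_days: int = 365, hot_min_reads: int = 10) -> str:
    """Classify an asset as 'prioritize', 'retain', or 'review'."""
    idle_days = (today - asset.last_accessed).days
    if idle_days > cold_after_days:
        return "review"      # likely dark data: archive or drop before migrating
    if asset.reads_last_90d >= hot_min_reads:
        return "prioritize"  # actively used: migrate in an early wave
    return "retain"          # touched occasionally: migrate in a later wave


today = date(2024, 1, 1)
inventory = [
    DataAsset("sales_orders", 820.0, date(2023, 12, 30), 450),
    DataAsset("clickstream_2019", 5400.0, date(2021, 3, 14), 0),
    DataAsset("hr_snapshots", 12.5, date(2023, 9, 2), 3),
]
plan = {asset.name: triage(asset, today) for asset in inventory}
print(plan)
```

In practice the thresholds would come from your own retention policies and access logs rather than fixed constants.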

Acceldata also helps organizations configure their Snowflake accounts for efficiency and security. If desired, we also provide deep-dive information for organizations that want to fine-tune how their data is clustered, micro-partitioned, and more.

Data Migration. Now comes the actual move. As data is ingested into Snowflake, Acceldata provides deep, real-time insights into the performance of Snowpipe and COPY commands.

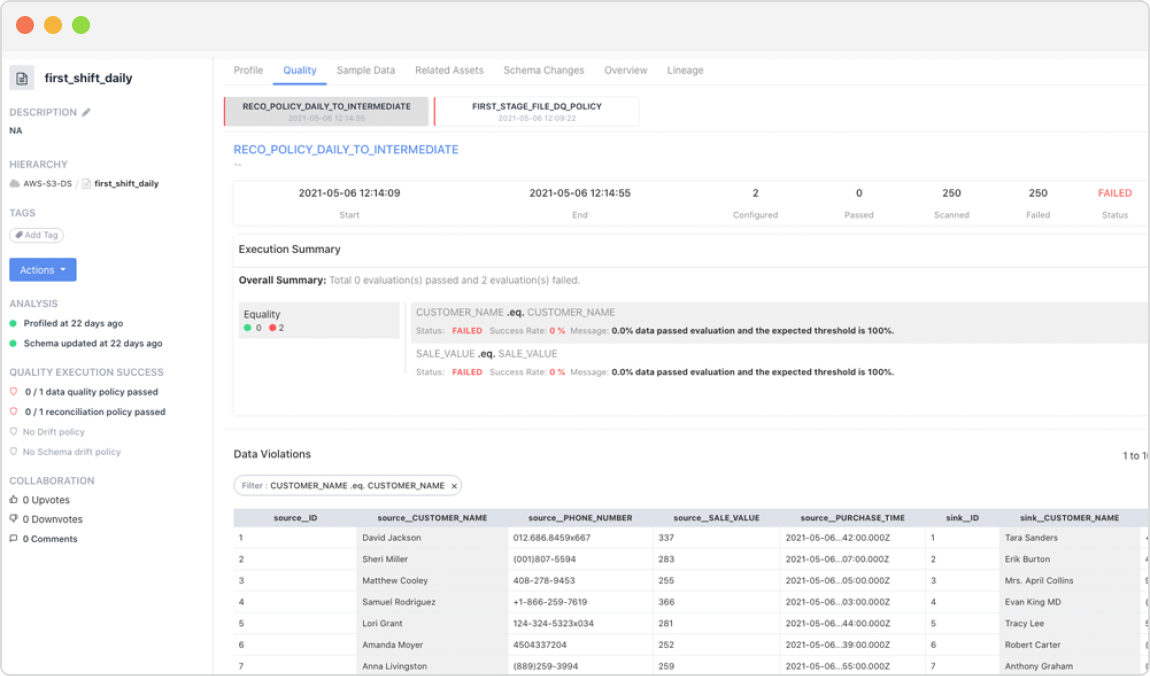

More importantly, Acceldata makes it easy to check the integrity of the data migrated from Hadoop to Snowflake by comparing the source and target datasets. As part of this data reconciliation, Acceldata also helps engineers perform root cause analysis (RCA) on migrated workloads that are not functioning as expected.

For instance, one common issue is Snowflake’s preferred data structure: it automatically divides large tables into micro-partitions. While micro-partitions help Snowflake speed up queries, they differ significantly from the data structures used by Hadoop and HDFS.

There are also more minor issues specific to Snowflake, such as how it handles case in SQL identifiers (unquoted names resolve as uppercase, while double-quoted names are case-sensitive), that can wreak havoc on your data schemas and lineage during migration. Acceldata, in particular Acceldata Torch, can catch problems big and small during migration by validating the data in deeper ways and over more dimensions than other data quality tools such as Looker. This helps create greater trust that errors haven’t crept into your data, errors that would otherwise blow up later on in full view of your executives.
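The source-to-target comparison described in this section can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how Acceldata Torch works internally: rows are assumed to be already fetched as tuples, values are compared by their string form, and the helper names (`table_fingerprint`, `case_problems`, `reconcile`) are hypothetical. The case check relies on Snowflake's rule that unquoted identifiers resolve as uppercase.

```python
import hashlib
from typing import Iterable, List, Sequence, Tuple


def table_fingerprint(rows: Iterable[Sequence[object]]) -> Tuple[int, str]:
    """Return (row count, order-independent digest) for a set of rows."""
    count, combined = 0, 0
    for row in rows:
        # Simplification: values are compared by string form; NULL gets a marker.
        text = "\x1f".join("\x00NULL" if v is None else str(v) for v in row)
        digest = hashlib.sha256(text.encode("utf-8")).digest()
        # Summing (not XOR-ing) digests keeps duplicate rows from cancelling out.
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return count, f"{combined:064x}"


def case_problems(source_cols: Sequence[str],
                  target_stored_cols: Sequence[str]) -> List[str]:
    """Flag source columns that an unquoted Snowflake reference would not find."""
    stored = set(target_stored_cols)
    return [
        f"{col}: unquoted reference resolves to {col.upper()}, not found in target"
        for col in source_cols
        if col.upper() not in stored
    ]


def reconcile(source_cols, source_rows, target_cols, target_rows) -> List[str]:
    problems = case_problems(source_cols, target_cols)
    src_count, src_hash = table_fingerprint(source_rows)
    tgt_count, tgt_hash = table_fingerprint(target_rows)
    if src_count != tgt_count:
        problems.append(f"row count mismatch: {src_count} vs {tgt_count}")
    elif src_hash != tgt_hash:
        problems.append("content mismatch: same row count, different values")
    return problems


# Hypothetical sample: same rows in a different order, one column created quoted.
hive_cols = ["order_id", "amount"]
hive_rows = [(1, "9.50"), (2, None)]
sf_cols = ["ORDER_ID", "amount"]          # "amount" was created with quotes
sf_rows = [(2, None), (1, "9.50")]
print(reconcile(hive_cols, hive_rows, sf_cols, sf_rows))
```

Because the digest is order-independent, the check tolerates the different row ordering you would expect between HDFS files and Snowflake micro-partitions, while still catching dropped, duplicated, or altered rows.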

Data Consumption. Once the data is moved and checked for quality, Acceldata helps you rebuild the rest of your data infrastructure. It scans your new Snowflake account to automatically discover and profile your data assets, their structure, their content and how they are related to each other (i.e. dependencies and data lineages). No more manual creation of data catalogs.

We also help you rebuild data pipelines connecting repositories, applications, and ML frameworks, or pipelines oriented around transforming that data.

Data Monitoring. Once your Snowflake infrastructure is created, Acceldata helps monitor it so that it maintains error-free efficiency as data starts flowing through your data pipelines. Acceldata’s multi-dimensional capabilities allow you to test and measure your data quality in many different ways, including for accuracy, completeness, consistency, validity, uniqueness and timeliness, as well as schema and/or model drift.
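As a rough illustration of what measuring a few of these dimensions means, the following Python sketch scores completeness, uniqueness, and validity for a column. The function names, the sample rows, and the email rule are hypothetical, and real checks would run against tables rather than in-memory lists.

```python
from typing import Callable, Dict, List, Optional

Row = Dict[str, Optional[str]]


def completeness(rows: List[Row], column: str) -> float:
    """Share of rows where the column is not null."""
    if not rows:
        return 1.0
    return sum(r.get(column) is not None for r in rows) / len(rows)


def uniqueness(rows: List[Row], column: str) -> float:
    """Share of non-null values that are distinct."""
    values = [r[column] for r in rows if r.get(column) is not None]
    if not values:
        return 1.0
    return len(set(values)) / len(values)


def validity(rows: List[Row], column: str,
             is_valid: Callable[[str], bool]) -> float:
    """Share of non-null values that pass a caller-supplied rule."""
    values = [r[column] for r in rows if r.get(column) is not None]
    if not values:
        return 1.0
    return sum(is_valid(v) for v in values) / len(values)


# Hypothetical sample data.
rows = [
    {"id": "1", "email": "a@example.com"},
    {"id": "2", "email": None},
    {"id": "2", "email": "not-an-email"},
    {"id": "4", "email": "d@example.com"},
]
report = {
    "email_completeness": completeness(rows, "email"),
    "id_uniqueness": uniqueness(rows, "id"),
    "email_validity": validity(rows, "email", lambda v: "@" in v),
}
print(report)
```

Each score is a ratio between 0 and 1, which makes it straightforward to track over time and compare against a target.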

Our continuous data observability ensures that you have up-to-the-moment information on the state of your data in Snowflake. And rather than bombarding you with false alarms, Acceldata uses thresholds set manually by data engineers or trained via machine learning analysis to notify you only when anomalies and incidents become severe. That helps you avoid the bane of admins everywhere: alert fatigue.
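The difference between a manually set threshold and a learned one can be shown with a small Python sketch. Here "learned" is just the mean plus three standard deviations of recent history, a deliberately simple stand-in for the machine learning analysis mentioned above; the function names and numbers are hypothetical.

```python
from statistics import mean, stdev
from typing import Optional, Sequence


def learned_threshold(history: Sequence[float], k: float = 3.0) -> float:
    """Derive a threshold from history: mean + k standard deviations.

    Requires at least two historical points (statistics.stdev raises otherwise).
    """
    return mean(history) + k * stdev(history)


def should_alert(value: float, history: Sequence[float],
                 manual_threshold: Optional[float] = None,
                 k: float = 3.0) -> bool:
    """Alert only when the value exceeds the manual or learned threshold."""
    if manual_threshold is not None:
        threshold = manual_threshold
    else:
        threshold = learned_threshold(history, k)
    return value > threshold


# Hypothetical metric: pipeline latency in seconds over recent runs.
history = [100.0, 102.0, 98.0, 101.0, 99.0]
print(should_alert(103.0, history))                          # small wobble
print(should_alert(120.0, history))                          # clear outlier
print(should_alert(103.0, history, manual_threshold=102.5))  # engineer override
```

A learned threshold adapts to each metric's normal variation, so a small wobble stays quiet, while a manual threshold lets an engineer enforce a hard limit regardless of history.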

Optimization. Besides providing visibility and operational efficiency, Acceldata provides powerful Cost Optimization tools for Snowflake to aid in your data value engineering initiatives. In addition to gathering a wealth of data on usage, performance, and costs, Acceldata lets you explore costs, detect spikes and discover their root causes, forecast spend against your cloud provider contract, and get recommendations for reducing costs.

This makes it easy for companies to accurately rightsize their resources to match workload and SLA requirements and save money, instantly turning your team into cloud data FinOps experts. Acceldata highlights anomalous workloads and provides statistics to help you optimize both performance and cost.
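Spike detection of this kind can be approximated with a rolling baseline. The Python sketch below flags days whose credit consumption exceeds 1.5 times the median of the previous seven days. It is a minimal illustration, not Acceldata's algorithm: the function name, the window, the factor, and the sample figures are all assumptions.

```python
from statistics import median
from typing import List, Sequence


def find_cost_spikes(daily_credits: Sequence[float],
                     window: int = 7, factor: float = 1.5) -> List[int]:
    """Return indexes of days that exceed factor x the trailing median."""
    spikes = []
    for i in range(window, len(daily_credits)):
        baseline = median(daily_credits[i - window:i])
        if baseline > 0 and daily_credits[i] > factor * baseline:
            spikes.append(i)
    return spikes


# Hypothetical daily warehouse credit usage; day 8 contains a runaway workload.
credits = [40, 42, 41, 39, 43, 40, 41, 44, 95, 42]
print(find_cost_spikes(credits))
```

Using the median rather than the mean keeps a single expensive day from inflating the baseline and hiding the next spike.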

Photo by Anders Jildén on Unsplash
