Data Observability is becoming an indispensable practice for organizations that build data products and rely on data to drive their operations and decision-making. From data validation and anomaly detection to data reliability and data pipeline monitoring, Data Observability provides organizations with the capabilities to ensure the quality, accuracy, and reliability of their data infrastructure and pipelines, the insights and tools to manage data costs, and much more. By proactively observing, analyzing, and troubleshooting data, organizations can optimize their data pipelines, minimize data errors and issues, and enable data-driven decision-making. Embracing Data Observability is crucial in today's data-driven world to ensure that organizations are leveraging data effectively and maximizing its value for their business success.

In an emerging practice such as Data Observability, knowing the common use cases is critical, and this blog explores them. Acceldata partnered with Eckerson Group to summarize these use cases in an informative white paper about Data Observability (see below). Let's dive in!

Data Observability Use Case Categories

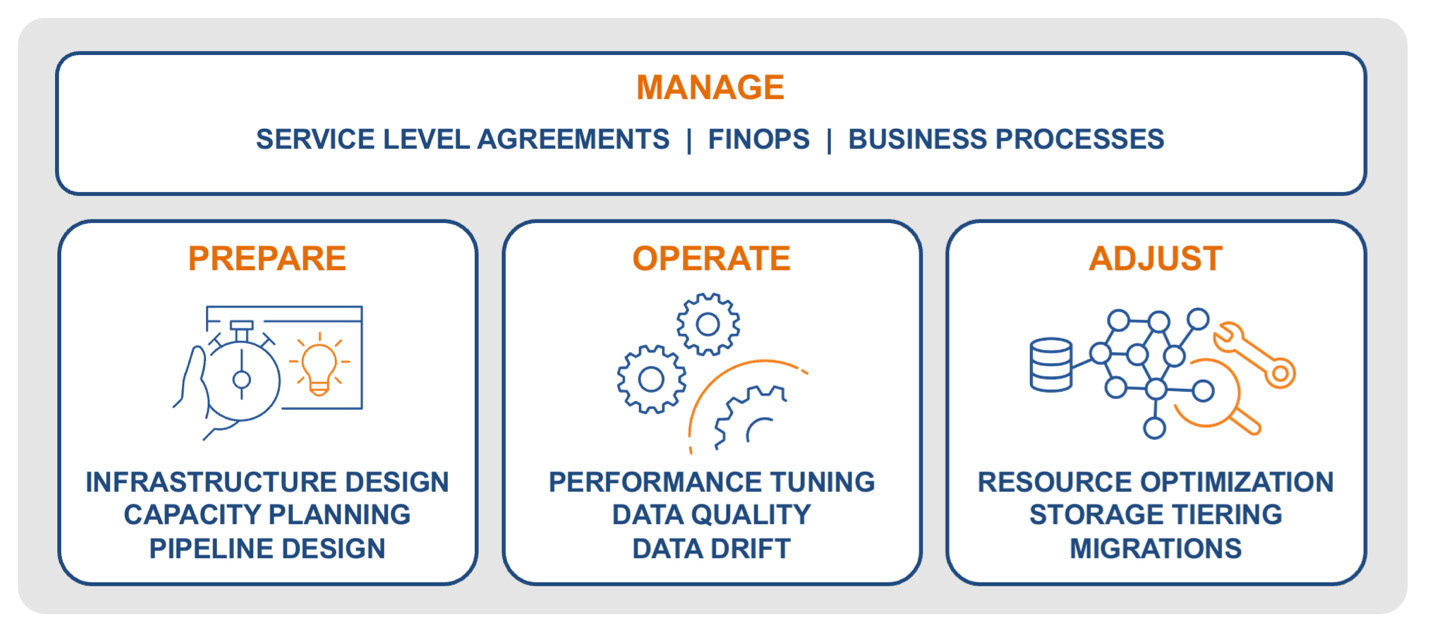

The ten common Data Observability use cases belong to four broad categories.

- Prepare - Data infrastructure design, capacity planning, and pipeline design

- Operate - Data supply chain performance tuning, data quality, and data drift

- Adjust - Resource optimization, storage tiering, and migrations

- Manage - Financial operations (FinOps), cost optimization

1. Prepare

In this category, data teams design their infrastructure, plan the capacity of infrastructure resources, and design the pipelines that deliver data for consumption.

Data Infrastructure Design

Data architects and engineers need to design high-performance, flexible, and resilient data architectures that can meet SLAs. To achieve this, they need to understand performance and utilization trends for their infrastructure. Perhaps slow network connections tend to delay ML models for fraud prevention, which increases customer and merchant wait times for large transactions. Perhaps financial analysts have long wait times when building earnings reports because business analysts swamp the CRM database with ad-hoc queries.

Data Observability tools help data architects and engineers design infrastructures based on analysis of performance and utilization trends.

Capacity Planning

Once data architects and data engineers select the infrastructure elements for their pipelines, platform engineers must work with CloudOps engineers to plan their capacity needs. They must provision the right resource amounts, maintain healthy utilization levels, and request the corresponding budget.

Data Observability helps in several ways. For example, engineers can simulate workloads to predict the necessary memory, CPU, or bandwidth required to handle a given workload at a given SLA. This helps them define an appropriate mix of resources, with reasonable buffer capacity for growth, and avoid spending money on unnecessary resources. Data Observability helps measure and predict the variance of key performance indicators (KPIs) to define points at which workloads risk becoming unstable.
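As a rough illustration of the sizing step described above, the following Python sketch derives a provisioning target from observed usage samples and reports how variable the workload is. The function name `plan_capacity`, the 95th-percentile basis, and the 20% growth buffer are assumptions chosen for illustration; they do not describe how any particular Data Observability product works.

```python
import math
import statistics


def plan_capacity(samples, headroom=0.2, percentile=95):
    """Estimate a provisioning target from observed peak-usage samples.

    samples:    peak usage per interval (for example, GB of memory).
    headroom:   fractional buffer for growth (hypothetical default of 20%).
    percentile: usage percentile the capacity should cover.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples to measure variance")

    ordered = sorted(samples)
    # Nearest-rank percentile: the smallest sample that covers the
    # requested share of observations.
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    covered_usage = ordered[rank - 1]

    mean = statistics.mean(ordered)
    stdev = statistics.stdev(ordered)

    return {
        "percentile_usage": covered_usage,
        "recommended": covered_usage * (1 + headroom),
        # A high coefficient of variation suggests an unstable workload.
        "coefficient_of_variation": stdev / mean,
    }


if __name__ == "__main__":
    memory_gb = [40, 42, 45, 50, 48, 47, 44, 60, 43, 46]
    print(plan_capacity(memory_gb))
```

In practice the samples would come from measured or simulated workloads, and the percentile and buffer would follow from the SLA and budget.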

Pipeline Design

Data architects and data engineers need to design flexible pipelines that can extract, transform, and load data from source to target with the appropriate latency, throughput, and reliability. This requires a granular understanding of how pipeline jobs will interact with infrastructure elements such as data stores, containers, servers, clusters, and virtual private clouds.

Data Observability provides that understanding. Data engineers can profile workloads to identify unneeded data, then configure a pipeline job to filter out that data before transferring it to a target. Profiling workloads also enables them to determine the ideal number of compute nodes for parallel processing.

2. Operate

Data Observability also helps data teams operate their increasingly complex environments. The use cases for this category include studying and tuning pipeline performance, finding and fixing data quality issues, and identifying data drift that affects machine learning (ML) models.

Performance Tuning

When a production BI dashboard, data science application, or embedded ML model fails to receive data on time, decisions and operations suffer. To prevent such issues and mitigate their damage, DataOps and CloudOps engineers must tune their pipelines based on health signals such as memory utilization, latency, throughput, traffic patterns, and compute cluster availability. Data Observability tools facilitate this effort, which improves their ability to meet SLAs for performance.

Data Quality

Data quality is the foundation of success for analytics. Without accurate views of the business, a sales dashboard, financial report, or ML model might do more harm than good. To minimize this risk, data teams need to find, assess, and fix quality issues with both data in flight and data at rest. Data Observability helps them resolve these issues before business owners or customers find them.
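To make the idea of finding quality issues concrete, here is a minimal Python sketch of two common checks: missing values in required fields and duplicate keys. The function `check_quality`, the field names, and the 1% null-rate threshold are hypothetical and exist only for this example.

```python
def check_quality(rows, required_fields, unique_key, max_null_rate=0.01):
    """Return a list of human-readable quality issues found in `rows`.

    rows:            list of dicts, one per record.
    required_fields: fields that should rarely be missing.
    unique_key:      field whose values should not repeat.
    max_null_rate:   tolerated share of missing values (assumed threshold).
    """
    issues = []
    total = len(rows)

    # Completeness: flag required fields with too many missing values.
    for field in required_fields:
        missing = sum(1 for row in rows if row.get(field) in (None, ""))
        rate = missing / total if total else 0.0
        if rate > max_null_rate:
            issues.append(
                f"{field}: null rate {rate:.1%} exceeds {max_null_rate:.1%}"
            )

    # Uniqueness: flag repeated key values.
    keys = [row.get(unique_key) for row in rows]
    duplicates = len(keys) - len(set(keys))
    if duplicates:
        issues.append(f"{unique_key}: {duplicates} duplicate value(s)")

    return issues


if __name__ == "__main__":
    orders = [
        {"id": 1, "amount": 10.0},
        {"id": 2, "amount": None},
        {"id": 2, "amount": 5.0},
    ]
    for issue in check_quality(orders, ["amount"], "id"):
        print(issue)
```

The same checks could run against a batch at rest or against a sample of records in flight.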

Data Drift

ML models tend to degrade over time, meaning that their predictions, classifications, or recommendations become less accurate. Data drift (i.e., changes in data patterns, often due to evolving business factors) can cause much of the degradation, and Data Observability helps detect it. These factors might include the health of the economy, price sensitivity of customers, or competitor actions. When they change, so does the data that feeds ML models, which reduces the accuracy of those models. Data scientists, ML engineers, and data engineers rely on Data Observability data drift policies and related insights to identify data drift so they can intervene and adjust the ML models.
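One simple way to quantify drift in a numeric feature is the Population Stability Index (PSI), which compares how values fall into bins in a baseline sample versus a current sample. The sketch below is an illustrative technique chosen for this example, not the method of any specific tool; the function name `psi`, the bin count, and the small floor used to avoid division by zero are assumptions.

```python
import math


def psi(baseline, current, bins=10):
    """Population Stability Index between two numeric samples.

    Bins are equal-width and derived from the baseline range; current
    values outside that range are counted in the first or last bin.
    """
    low, high = min(baseline), max(baseline)
    width = (high - low) / bins or 1.0  # guard against a constant baseline

    def bin_shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(current)
    return sum(
        (a - e) * math.log(a / e) for e, a in zip(expected, actual)
    )


if __name__ == "__main__":
    training = list(range(100))
    production = [value + 50 for value in training]  # shifted distribution
    print(f"PSI vs. itself:  {psi(training, training):.3f}")
    print(f"PSI vs. shifted: {psi(training, production):.3f}")
```

A PSI near zero indicates matching distributions. A commonly cited rule of thumb treats values above roughly 0.25 as significant drift, though a suitable threshold depends on the feature and the model.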

3. Adjust

Next, Data Observability helps data teams adjust the data environment. This category includes use cases such as resource optimization, storage tiering, and migrations.

Resource Optimization

Analytics and data teams can make ad hoc changes that lead to inefficient resource consumption. For example, the data science team might decide to quickly ingest huge new external datasets so they can re-train their ML models for customer recommendations. The BI team might start ingesting and transforming multi-structured data from external providers to build 360-degree customer views. DataOps and CloudOps engineers tend to support new workloads like these by consuming cloud compute on demand, which can create cost surprises. Data Observability helps optimize resources to get projects back within budget.

Storage Tiering

While analytics projects and applications require high volumes of data, they often focus on just a small fraction of it. Once trained, an ML model for fraud detection might need just 10 columns out of 1,000—the so-called “features”—to assess the risk of a given transaction. Once implemented, a sales performance dashboard might need just a few fresh data points each week to stay current. In both these cases, the rest of the data remains “cold,” with few if any queries. Data Observability helps data engineers identify cold data and offload it to a less expensive tier of storage.

To illustrate, a Data Observability tool can help inspect and visualize “skew,” which refers to the distribution of I/O across columns, tables, or other objects within a data store. For example, a data engineer might find that just 10% of the columns or tables in a CRM database support 95% of all queries. They might also find that most sales records aged more than five quarters are never touched again.
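The skew analysis described above can be sketched in a few lines of Python. Given query counts per table, the hypothetical function `split_hot_cold` returns the smallest set of tables that serves a target share of queries and treats the remainder as candidates for a cheaper tier. The table names and counts are invented for illustration.

```python
def split_hot_cold(query_counts, coverage=0.95):
    """Split objects into hot and cold sets by their share of queries.

    query_counts: mapping of object name -> number of queries observed.
    coverage:     share of all queries the hot set should serve.
    """
    total = sum(query_counts.values())
    if total == 0:
        # Nothing was queried, so everything is cold.
        return [], list(query_counts)

    # Rank objects from most to least queried.
    ranked = sorted(query_counts.items(), key=lambda item: item[1], reverse=True)

    hot, served = [], 0
    for name, count in ranked:
        if served / total >= coverage:
            break
        hot.append(name)
        served += count

    cold = [name for name, _ in ranked if name not in hot]
    return hot, cold


if __name__ == "__main__":
    crm_queries = {
        "accounts": 900,
        "contacts": 60,
        "notes": 30,
        "audit_log": 10,
        "archive_2019": 0,
    }
    hot, cold = split_hot_cold(crm_queries)
    print("hot:", hot)
    print("cold:", cold)
```

The same ranking works at the column or partition level, for example to find sales records past a certain age that are never read.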

Migrations

Cloud migration creates the need for many of the use cases described here. In addition, Data Observability can assist migrations themselves. Before migrating analytics projects to cloud platforms, DataOps and CloudOps engineers need to answer some basic questions, such as:

- What does the target cloud environment look like?

- How well will that environment support their analytics tools, applications, and datasets?

- How will their analytics workloads perform in that environment?

Engineers who fail to answer questions like these in advance might derail their migration or undermine analytics results afterwards. Data Observability can help answer such questions and minimize the risks.

4. Manage

Finally, Data Observability helps business and IT leaders fund analytics projects and applications from a business perspective. This category centers on financial operations (FinOps).

Financial Operations (FinOps)

Cloud platforms provide enterprises with financial flexibility, enabling them to rent elastic resources on demand rather than buying servers and storage arrays for their own data centers. But those elastic resources, compute in particular, can lead to budget-breaking bills at the end of the month. Surprises like these have given rise to FinOps.

This emerging discipline helps IT and data engineers, finance managers, data consumers, and business owners collaborate to reduce cost and increase the value of cloud-related projects. FinOps instills best practices, automates processes, and makes stakeholders accountable for the cost of their activities. Data teams use FinOps to make cloud-based analytics projects and applications more profitable, and they need the intelligence of data observability to achieve that.
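As a small illustration of making stakeholders accountable for the cost of their activities, the Python sketch below totals compute spend per owning team and flags teams that exceed their budget. The record fields (`owner`, `hours`, `rate_per_hour`), the team names, and the function `spend_by_owner` are all hypothetical; real billing data would come from the cloud provider's cost reports.

```python
from collections import defaultdict


def spend_by_owner(usage_records, budgets):
    """Aggregate compute spend per owner and compare it with budgets.

    usage_records: list of dicts with "owner", "hours", "rate_per_hour".
    budgets:       mapping of owner -> budget for the same period.
    """
    spend = defaultdict(float)
    for record in usage_records:
        spend[record["owner"]] += record["hours"] * record["rate_per_hour"]

    report = {}
    for owner, total in spend.items():
        budget = budgets.get(owner)
        report[owner] = {
            "spend": round(total, 2),
            "budget": budget,
            # Owners without a budget are reported but never flagged.
            "over_budget": budget is not None and total > budget,
        }
    return report


if __name__ == "__main__":
    usage = [
        {"owner": "data-science", "hours": 100, "rate_per_hour": 2.5},
        {"owner": "bi", "hours": 40, "rate_per_hour": 1.0},
        {"owner": "data-science", "hours": 20, "rate_per_hour": 2.5},
    ]
    budgets = {"data-science": 250.0, "bi": 100.0}
    for owner, row in spend_by_owner(usage, budgets).items():
        print(owner, row)
```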

Get Started with Data Observability Today

Adopting use cases is essential in requirements engineering and system development, enabling organizations to better understand user needs, define requirements, communicate effectively, and design solutions that are aligned with business outcomes.

Download the PDF version of the Eckerson Group white paper “Top Ten Use Cases for Data Observability” and get additional information.

No matter where you are on your data observability journey, the Acceldata platform can help you achieve your goals. Reach out to us for a demo of the Acceldata Data Observability Platform and get started.

Girish Bhat

SVP, Acceldata

@girishb

Photo by Vardan Papikyan on Unsplash
