Enterprises of all sizes are recognizing the growing importance of data in driving efficiency across various business and technical processes. Data has become the foundation for decision-making, with insights derived from it shaping the direction of businesses. Consequently, it is crucial for the data used in decision-making to be accessible to the appropriate users at the right moment, accompanied by the necessary level of quality.

The data collection and preparation processes are becoming increasingly complex, creating challenges such as performance bottlenecks, data quality issues, and failures in data workflows. To maintain trust in the data used for key decision-making, these issues must be prevented where possible and promptly identified and resolved when they do arise. Our primary focus is to ensure trust in decision-making data while also optimizing efficiency and cost.

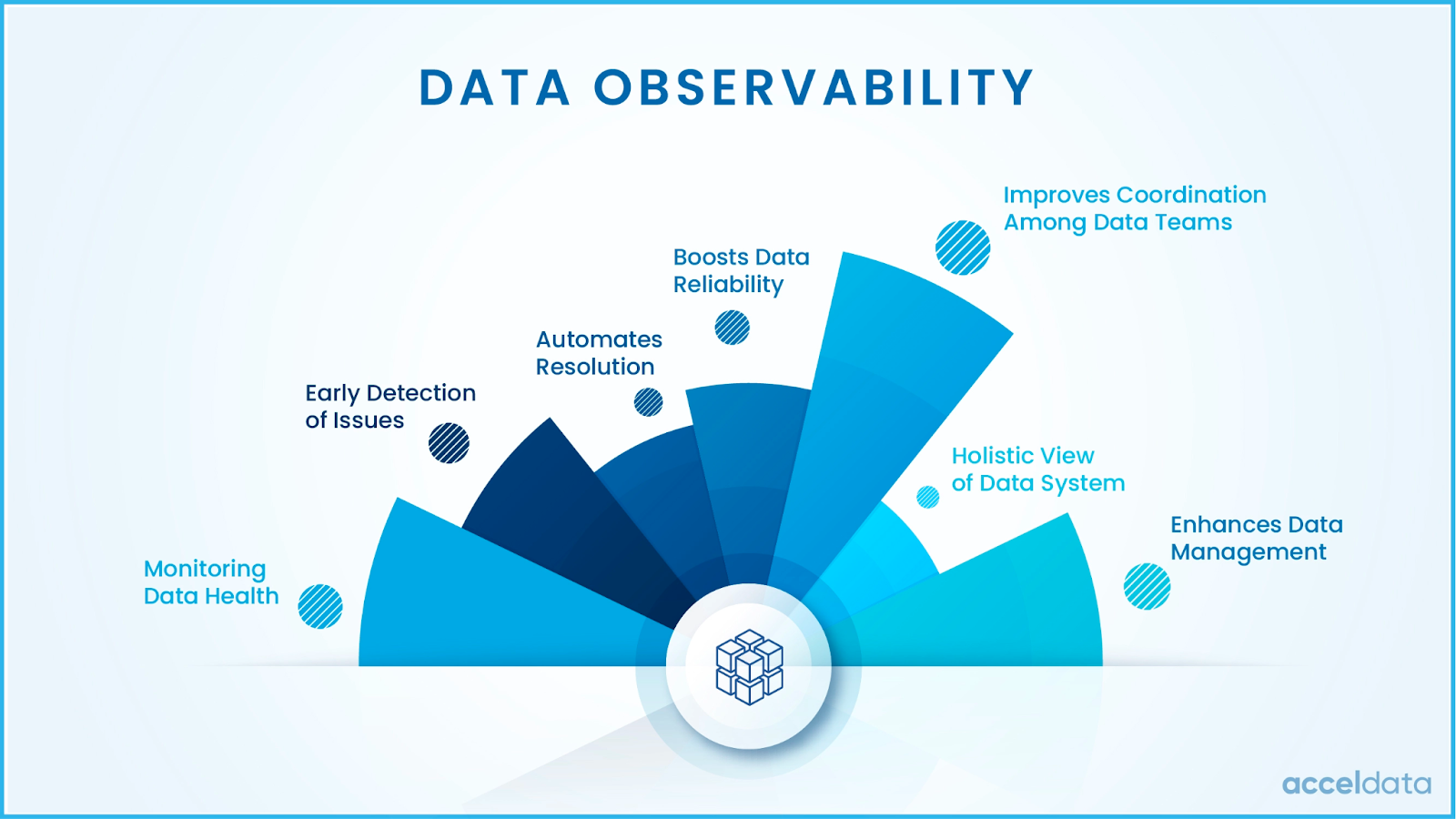

Data observability has emerged as a critical tool for addressing these challenges. It is the ability to monitor, gain real-time insight into, and troubleshoot data across the entire data management landscape, including data pipelines, data processing, and data infrastructure. By embracing data observability, organizations can better safeguard the quality, integrity, and performance of their data. Now, let's look more closely at each of these areas.

Data Observability and the Pursuit of Trusted, Accurate Data

Let's begin with maintaining trust in the data. Several factors can affect it. First, the sheer volume of data flowing into decision-making systems today is significant. Moreover, this data comes not only from internal sources but also from multiple external sources, arriving in various formats and at different velocities: some data streams in real time, while other data arrives in batches.

Managing this diverse data effectively and consolidating it into the right decision-making system is therefore critical. Data quality systems help mitigate quality issues, but it is also essential to continuously monitor incoming data and watch for any drift. Drift can occur both in the data itself (for example, a changed schema or a shift in value distributions) and in the code that processes it.
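As a minimal sketch of the kind of drift check described above, the Python below compares an expected schema with an observed one and computes a Population Stability Index (PSI) between a baseline sample and a current sample of a numeric column. The function names, the equal-width binning, and the small epsilon used for empty bins are illustrative assumptions, not any particular product's implementation.

```python
import math
from typing import Dict, List


def schema_drift(expected: Dict[str, str], observed: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare an expected column->type mapping with the one observed in new data."""
    return {
        "missing": sorted(set(expected) - set(observed)),
        "added": sorted(set(observed) - set(expected)),
        "type_changed": sorted(
            col for col in set(expected) & set(observed) if expected[col] != observed[col]
        ),
    }


def psi(baseline: List[float], current: List[float], bins: int = 10) -> float:
    """Population Stability Index using equal-width bins over the baseline's range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant baseline

    def proportions(values: List[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, though thresholds are best tuned per dataset.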

Such drift can cause data to behave in unintended ways and lead to unexpected business impact. This is where data observability proves invaluable. It goes beyond monitoring and alerting on changes or drift: it also analyzes the root causes behind those shifts and recommends appropriate actions. This end-to-end approach is what gives data observability its real power.

Let's put this in the context of using data to deliver an optimal digital customer experience. Consider a large global telecom corporation with a complex ecosystem of more than 60 digital applications, 500+ microservices, and a mix of cloud and on-premises software. Although the organization intended to use this data environment to achieve its business goals, the environment was producing a continuous stream of errors and inaccurate data, resulting in frequent customer dissatisfaction.

To resolve the issues with data reliability and gain comprehensive visibility into key operational metrics across the systems, the company implemented a data observability solution. This solution captured all data points of the customer journey, mapped them to the system's flow, and correlated the data across the different systems. By optimizing data and emphasizing data reliability, the organization not only addressed these challenges but also achieved a seamless integration of data and business processes. This enabled them to make data available to the right users at the right time, with the highest level of data quality. Ultimately, this data observability approach facilitated and accelerated business transformation.
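The correlation step can be illustrated with a small sketch: events emitted by different systems are grouped by a shared journey identifier, ordered by time, and checked for missing steps. The `Event` fields, the `journey_id` correlation key, and the step names are hypothetical; a real environment would need some equivalent key propagated across its applications and microservices.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Event:
    journey_id: str  # hypothetical correlation key shared by all systems
    system: str      # e.g. the web app, billing, or CRM system that emitted the event
    step: str        # customer-journey step this event represents
    ts: float        # event time in epoch seconds


def correlate(events: List[Event]) -> Dict[str, List[Event]]:
    """Group events from all systems by journey and order each journey by time."""
    journeys: Dict[str, List[Event]] = defaultdict(list)
    for e in events:
        journeys[e.journey_id].append(e)
    for evs in journeys.values():
        evs.sort(key=lambda e: e.ts)
    return dict(journeys)


def incomplete_journeys(journeys: Dict[str, List[Event]], expected_steps: List[str]) -> Dict[str, List[str]]:
    """Return, per journey, the expected steps that no system reported."""
    gaps: Dict[str, List[str]] = {}
    for jid, evs in journeys.items():
        seen = {e.step for e in evs}
        missing = [s for s in expected_steps if s not in seen]
        if missing:
            gaps[jid] = missing
    return gaps
```

A journey with a missing step points to the system boundary where data was lost, which is a natural starting point for troubleshooting.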

Behind these improvements were critical activities such as regular monitoring of data pipelines, ensuring pipelines completed and delivered on time, and identifying compute and storage bottlenecks. A dedicated data team, working from a foundation of data observability, ensured these activities were executed effectively.
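Timely completion and delivery can be checked by comparing each pipeline run against the time its consumers expect the data. The sketch below assumes a hypothetical `PipelineRun` record with a deadline and an optional finish time; how those values are collected depends on the orchestrator in use.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class PipelineRun:
    name: str
    deadline: datetime            # when downstream consumers expect the data
    finished: Optional[datetime]  # None if the run has not completed yet


def sla_breaches(runs: List[PipelineRun], now: datetime) -> List[str]:
    """Describe every run that finished late or is still running past its deadline."""
    breaches = []
    for r in runs:
        if r.finished is None and now > r.deadline:
            breaches.append(f"{r.name}: not finished, deadline passed")
        elif r.finished is not None and r.finished > r.deadline:
            late_min = (r.finished - r.deadline).total_seconds() / 60
            breaches.append(f"{r.name}: finished {late_min:.0f} min late")
    return breaches
```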

Some may wonder how this differs from the various data quality and infrastructure monitoring tools available today. The key distinction lies in the fact that while these tools excel in their respective domains, they often overlook the underlying data being processed—a crucial aspect of data observability.

An Approach for Effective Enterprise Data Observability

This raises questions about data quality tools and their ability to address data issues across systems. While data quality tools and technologies focus on ensuring accuracy, completeness, reliability, and consistency, observability is primarily concerned with monitoring and understanding the behavior and performance of data systems and processes. This represents a significant difference.

Data quality and data observability are often mistakenly perceived as synonymous. Although data quality is an important aspect of both, data observability offers a broader range of capabilities. These include data lineage, data flow monitoring, tracking of data transformations, data pipeline health monitoring, data access monitoring, and transparency across data pipelines. These elements of data observability are instrumental in establishing and strengthening trust in the data that drives decision-making within the organization.
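Lineage is what turns an alert into a root-cause hypothesis. As a minimal sketch, assuming lineage is available as a mapping from each dataset to the datasets it is derived from, the Python below walks upstream from a failing asset and reports the unhealthy ancestors whose own inputs are all healthy, which are the most likely origin of the problem. The dataset names and health flags are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: each dataset maps to the datasets it is derived from.
Lineage = Dict[str, List[str]]


def upstream(lineage: Lineage, node: str) -> Set[str]:
    """All transitive inputs of `node`, found with a breadth-first walk."""
    seen: Set[str] = set()
    queue = deque(lineage.get(node, []))
    while queue:
        n = queue.popleft()
        if n not in seen:
            seen.add(n)
            queue.extend(lineage.get(n, []))
    return seen


def root_causes(lineage: Lineage, node: str, unhealthy: Set[str]) -> Set[str]:
    """Unhealthy assets at or above `node` that have no unhealthy inputs themselves."""
    candidates = (upstream(lineage, node) | {node}) & unhealthy
    return {c for c in candidates if not (upstream(lineage, c) & unhealthy)}
```

For example, if a dashboard, the mart it reads, and a cleaned orders table are all flagged, the walk points at the cleaned orders table rather than at the dashboard where the symptom was first noticed.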

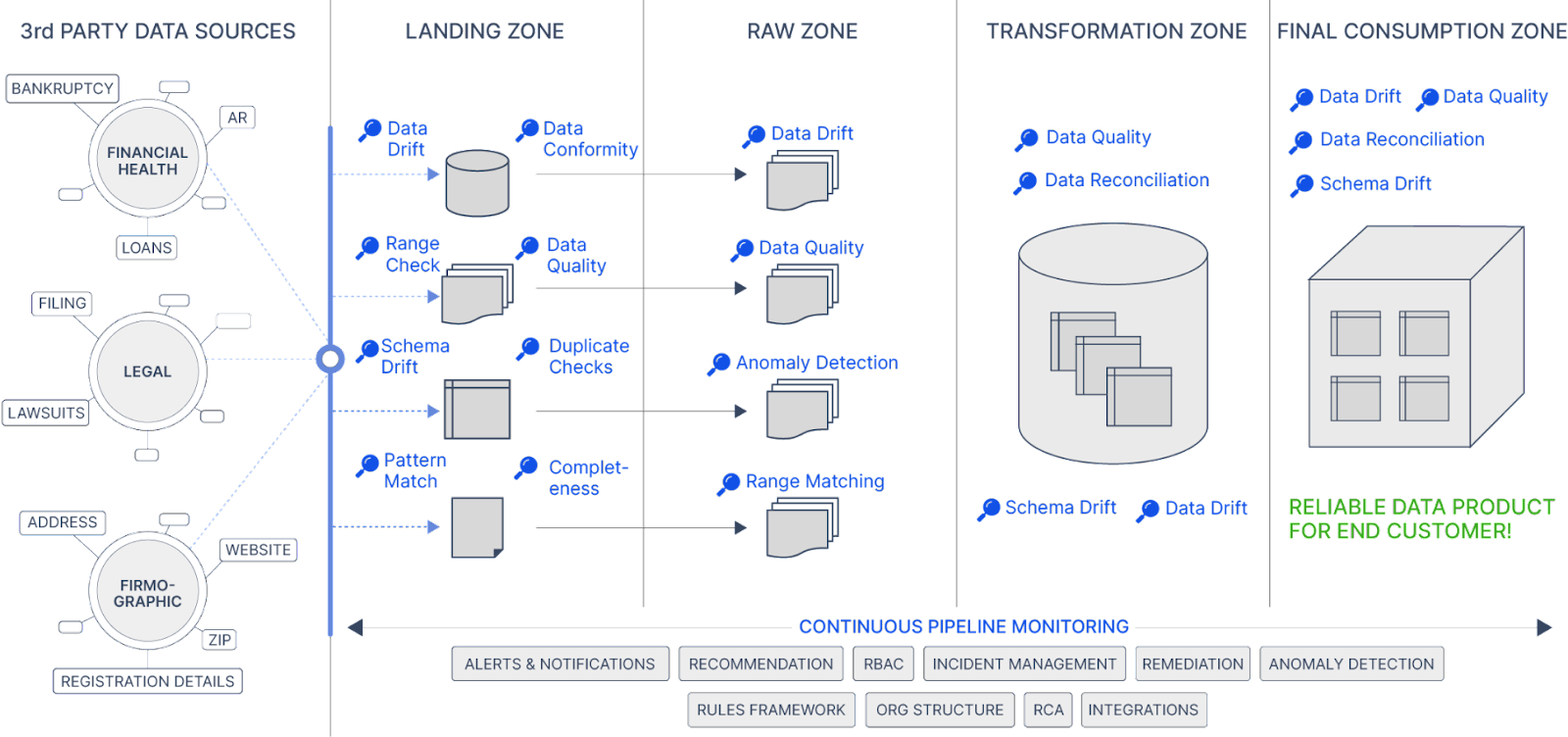

Let's consider a real-world example to further illustrate the concept. Take a typical bank's data environment, which generally consists of three main zones: a landing zone, a transformation zone (or data layer), and a consumption zone. In addition, a financial services organization will have business-specific data zones, such as payment or compliance data stores, tailored to internal and external requirements. These data stores can be maintained on-premises, in the cloud, or in hybrid environments.

While this architecture is fairly standard, it introduces additional complexity. Numerous data pipelines are responsible for transforming data across these layers early in the data journey. This activity incurs costs across the layers and data pipelines, including data storage, processing, and consumption. Therefore, it becomes crucial to effectively track and manage these costs to avoid exceeding budgets. Chief Data Officers (CDOs) face the challenge of optimizing spending and implementing a chargeback mechanism. To address these concerns, CDOs are increasingly turning to spend intelligence solutions. By leveraging spend intelligence, they can ensure the best return on investment (ROI) from their data initiatives and scale costs in alignment with changing needs.

An effective data observability solution offers not only insights into spend but also spend prediction modeling, departmental chargeback with usage alerting, and optimal capacity planning. While some data warehouse and other data tool vendors may provide spend insights, they often lack a comprehensive view of the entire data environment, particularly when it spans a combination of platforms. Only a truly comprehensive data observability solution can offer such a holistic perspective.
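Departmental chargeback with usage alerting can be sketched as a proportional cost split plus a budget check on projected spend. In the Python below, the usage units (for example, compute-hours), the budgets, and the naive run-rate projection are illustrative assumptions; a production spend model would likely also account for seasonality, committed capacity, and pricing tiers.

```python
from typing import Dict, List


def chargeback(total_cost: float, usage: Dict[str, float]) -> Dict[str, float]:
    """Split a shared platform cost across departments in proportion to their usage."""
    total_usage = sum(usage.values())
    if total_usage == 0:
        return {dept: 0.0 for dept in usage}
    return {dept: round(total_cost * u / total_usage, 2) for dept, u in usage.items()}


def projected_month_spend(spend_to_date: float, day: int, days_in_month: int) -> float:
    """Naive run-rate projection: remaining days cost the same as the average day so far."""
    if day <= 0:
        raise ValueError("day must be positive")
    return spend_to_date * days_in_month / day


def budget_alerts(charges: Dict[str, float], budgets: Dict[str, float],
                  day: int, days_in_month: int) -> List[str]:
    """Alert for each department whose projected monthly spend exceeds its budget."""
    alerts = []
    for dept, spent in charges.items():
        projected = projected_month_spend(spent, day, days_in_month)
        budget = budgets.get(dept)
        if budget is not None and projected > budget:
            alerts.append(f"{dept}: projected {projected:.2f} exceeds budget {budget:.2f}")
    return alerts
```

Alerting on the projection rather than on spend to date gives a department time to react before its budget is actually exceeded.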

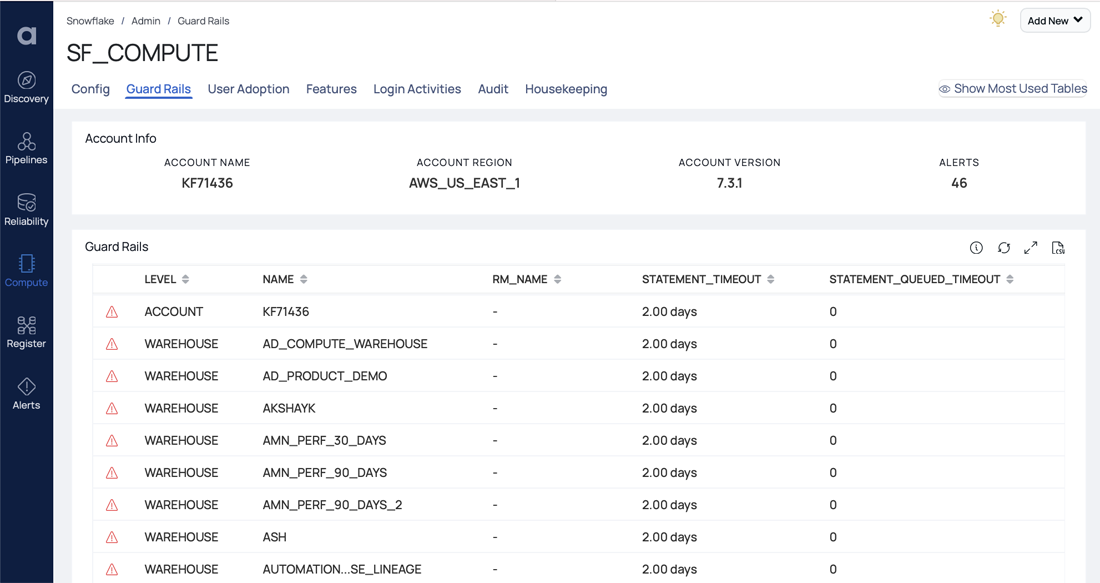

It's worth mentioning that this goes beyond FinOps, although effective data observability solutions do support FinOps efforts. A genuine spend intelligence solution, like the Acceldata Data Observability Platform, not only performs spend optimization functions but also orchestrates data monitoring and operational insights. Handling all these aspects is no small feat. To truly understand where your data budget is being spent or should be spent, you need an approach that considers the financial aspect as inseparable from data activity.

Acceldata goes further by identifying dark, redundant, and cold data assets, all while providing insights into the structure of the entire data architecture. Factors such as availability, reliability, accessibility, and frequency requirements contribute to significant cost variations. This is another crucial reason why data observability is essential for gaining a comprehensive understanding of data costs.
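Identifying underused or duplicate data assets can be approximated from access metadata and content hashes. The sketch below uses simplified, hypothetical categories: dark (never read since landing), cold (not read within a configurable window), and redundant (an exact duplicate by checksum). The `Asset` record and the 90-day default are assumptions for illustration, not a description of how Acceldata implements this.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Optional


@dataclass
class Asset:
    name: str
    checksum: str                      # content hash, used to spot exact duplicates
    last_accessed: Optional[datetime]  # None means never read since landing


def classify(assets: List[Asset], now: datetime, cold_after_days: int = 90) -> Dict[str, List[str]]:
    """Flag dark, cold, and redundant assets using simple metadata heuristics."""
    result: Dict[str, List[str]] = {"dark": [], "cold": [], "redundant": []}
    by_checksum: Dict[str, List[str]] = defaultdict(list)
    for a in assets:
        by_checksum[a.checksum].append(a.name)
        if a.last_accessed is None:
            result["dark"].append(a.name)
        elif now - a.last_accessed > timedelta(days=cold_after_days):
            result["cold"].append(a.name)
    for names in by_checksum.values():
        # Keep the alphabetically first copy; flag the remaining copies as redundant.
        result["redundant"].extend(sorted(names)[1:])
    return result
```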

Photo by Leon Seierlein on Unsplash
