Data products are built by organizations that recognize the value of, and their ability to productize, continuously usable and actionable outcomes from data.

It’s not an easy task, but with reliable, high-quality data, product developers can be agile in how they approach their goals and can apply insights from customer usage to guide their product strategy.

The data generated and correlated from business transactions, sales figures, customer logs, or information about stakeholders is critical to business success, whether it’s used for internal purposes or built into a product. If you are serious about building valuable data products, you need a strategy to ensure you are ready to apply your data in effective ways and adapt as you face challenges. It’s a different product dynamic, one where you can't just build, test, and deploy.

Instead, data teams need a framework for identifying the right data, ensuring access to it, and creating an environment that keeps it reliable and accurate. There are three key considerations that data teams must focus on when building data products:

User Needs: Your product needs to serve a purpose; this is essential to achieving any level of product-market fit. The beauty of building data products is that users tell you what they do and don’t like through their usage. Your data will identify usage patterns, behavioral trends, and other insights from which you can derive your product plans. Start with the notion that users are your beacon, and that they will show you how to gather enough information and insight to make effective decisions.

Exclusivity: The next step is to determine what is exclusive, differentiating, and valuable about your data product. This can be broken into three dimensions: breadth, depth, and multiple data perspectives. Breadth gives you visibility across an entire customer or population segment. Depth refers to how exhaustively you are able to study the behaviors of individual people, companies, and processes. By exploring both, you can predict future behaviors or find correlations that are not visible to others. The third dimension is developing perspectives derived from multiple relevant sources of data. This requires connecting disparate data sources and correlating that data in a way that helps you achieve your product’s purpose.

Build agility into your product: This means continuously identifying new opportunities to serve user needs and shipping new versions that help users meet them. For data product developers, this resembles a DevOps-style approach: you continually offer additional features that help your users understand customer behaviors and other data patterns more effectively. These can include predictive and descriptive models that provide insight into how customers might behave in different situations in the future. Industry expertise, best practices, key metrics, and thoughtful recommendations are other elements you can include.

How data observability improves data scaling

Modern companies need to monitor data across many tools and applications, but few have the necessary knowledge of how these tools and applications function. Data observability helps companies understand, monitor, and manage their data across the full tech stack and diagnose data health throughout the data lifecycle.

Moreover, a data observability platform can help organizations resolve issues in real time using telemetry data such as logs, metrics, and traces. For organizations with many unlinked tools, observability monitoring can also help IT teams gain visibility into system performance and improve their processes. Another vital benefit of data observability is that it simplifies root cause analysis. It lets teams actively debug their systems and gain insight into how data interacts with different tools and moves through the IT infrastructure.

With this comprehensive view, your company can maintain high data quality, strengthen data integrity, and keep data consistent across the entire pipeline. This makes it possible to deliver on service-level agreements (SLAs) and operate with relevant information that can be analyzed and applied for effective decision making.

Moreover, by tracking the performance of data throughout your data pipelines, you can prevent data downtime and maintain consistent health across your IT systems. Run duration, pipeline status, and the identification of specific issues offer more insight into the health and state of your data pipelines, so you can steadily build the observability your organization needs. Column-level and row-level profiling and anomaly detection offer insights into data performance across your network.
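To make column-level profiling and anomaly detection more concrete, here is a minimal Python sketch. It is an illustration only, not how any particular observability platform works: the function names, the z-score rule, and the threshold of 3 standard deviations are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def null_rate_by_column(rows):
    """Column-level profile: fraction of missing (None or absent) values per column."""
    if not rows:
        return {}
    columns = {key for row in rows for key in row}
    total = len(rows)
    return {
        col: sum(1 for row in rows if row.get(col) is None) / total
        for col in sorted(columns)
    }


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` when it sits more than `threshold` sample standard
    deviations away from the mean of `history` (a simple z-score rule,
    assumed here for illustration)."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical data: a few records and a short history of daily row counts.
records = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3},
]
daily_row_counts = [100, 102, 98, 101, 99]

profile = null_rate_by_column(records)
spike = is_anomalous(daily_row_counts, 150)
```

In practice you would run checks like these on a schedule for each pipeline and alert when a null rate or row count drifts outside its usual range.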

Collectively, these observability metrics offer comprehensive insight into your system’s health and identify potential issues that could affect individual elements of your system and the quality of your data. Many organizations start by identifying the right data observability platform for bringing data together across their full tech stack. Yet implementing data observability goes beyond the tools you use. To implement it effectively, start with an observability strategy that prepares your team for the effect observability will have on their workflows.

Start by developing a data observability strategy that guides how you construct your observability framework. The next step is to organize your team around a culture of data-driven collaboration and to show how adopting a new observability tool can help combine data across disparate systems.

Next, develop a standardization library to define what good telemetry data looks like. This will help your team standardize metrics, logs, and traces across data lakes, warehouses, and other sources so they integrate cleanly into your data observability tool. While you’re establishing data standards, you can start developing governance rules, such as retention requirements and data testing methods, to detect and carefully remove bad data.
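As a rough illustration of what one entry in such a standardization library might look like, the sketch below validates a single metric record against a shared standard. The field names (`name`, `value`, `unit`, `timestamp`, `source`) and the rule that timestamps must carry a timezone are assumptions made for this example; your own standard would define its own fields and rules.

```python
from datetime import datetime

# Hypothetical standard: every metric record must carry these fields and types.
REQUIRED_FIELDS = {
    "name": str,
    "value": (int, float),
    "unit": str,
    "timestamp": str,  # ISO 8601 string with a UTC offset
    "source": str,
}


def validate_metric(record):
    """Return a list of problems; an empty list means the record conforms."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"wrong type for {field}")

    timestamp = record.get("timestamp")
    if isinstance(timestamp, str):
        try:
            parsed = datetime.fromisoformat(timestamp)
        except ValueError:
            problems.append("timestamp is not ISO 8601")
        else:
            if parsed.tzinfo is None:
                problems.append("timestamp has no timezone")
    return problems


good = {
    "name": "pipeline.rows_loaded",
    "value": 1250,
    "unit": "rows",
    "timestamp": "2024-01-01T00:00:00+00:00",
    "source": "warehouse",
}
bad = {
    "name": "pipeline.rows_loaded",
    "value": 1250,
    "timestamp": "2024-01-01T00:00:00",
    "source": "warehouse",
}

good_problems = validate_metric(good)
bad_problems = validate_metric(bad)
```

Returning a list of problems, rather than raising on the first one, makes it easier to report every violation in a record at once and to feed the results into governance rules.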

Finally, choose an observability platform that works for your organization and integrate your data sources into that platform. You may need to build new observability pipelines to access the metrics, logs, and traces needed to gain end-to-end visibility. Add relevant governance and data management rules for your data, then correlate the metrics you’re tracking in your platform with your preferred business outcomes. As you detect and address issues through your observability platform, you can discover new ways to automate your security and data management practices, too.

How to choose the right data observability platform

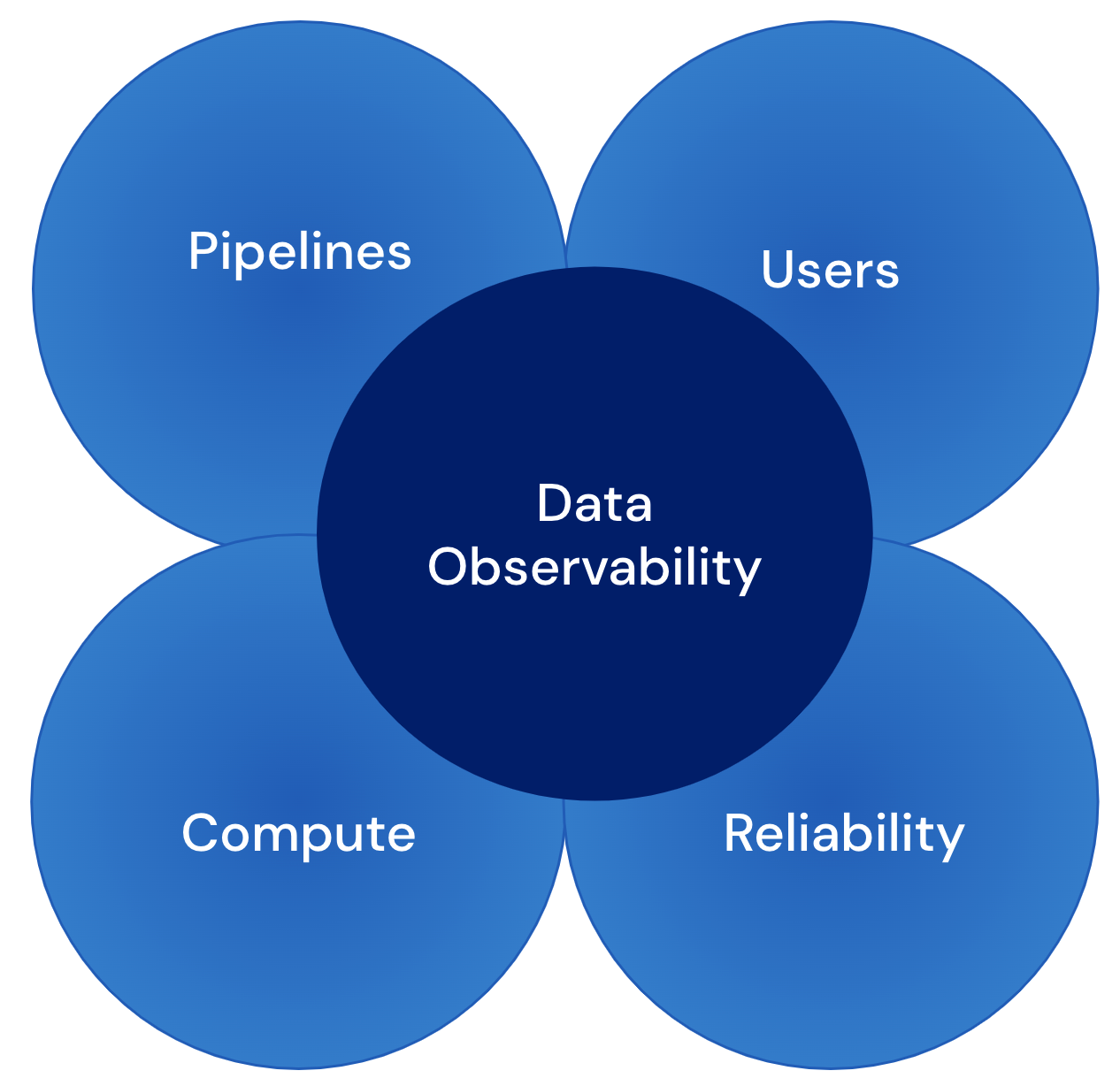

At its core, a good observability platform rests on four layers: compute and infrastructure, reliability, pipelines, and users.

With compute and infrastructure observability, you can understand your consumption, predict and eliminate outages, and manage your spend. Data reliability looks at the completeness and accuracy of data, as well as its consistency across time and sources. Consistency is especially important, because data must be consistent to be reliable and therefore trustworthy. Pipeline observability monitors and manages all of your telemetry pipelines at scale. At the user layer, chief data officers, data engineers, platform leaders, and IT leaders need to understand business issues, including user experience obstacles, and be able to propose technology solutions.
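The reliability layer can be sketched with two simple checks: one for completeness and one for consistency across sources. This is a minimal sketch under stated assumptions. The function names, the choice of row counts as the consistency signal, and the 1% tolerance are all hypothetical and would differ in a real platform.

```python
def completeness(rows, required_fields):
    """Share of rows in which every required field is present and non-empty."""
    if not rows:
        return 0.0
    complete = sum(
        1
        for row in rows
        if all(row.get(field) not in (None, "") for field in required_fields)
    )
    return complete / len(rows)


def consistent_across_sources(source_count, target_count, tolerance=0.01):
    """True when the two row counts differ by at most `tolerance`,
    measured as a fraction of the source count (assumed rule)."""
    if source_count == 0:
        return target_count == 0
    return abs(source_count - target_count) / source_count <= tolerance


# Hypothetical order records and row counts from two systems.
orders = [
    {"id": 1, "amount": 10},
    {"id": 2, "amount": None},
]

order_completeness = completeness(orders, ["id", "amount"])
counts_match = consistent_across_sources(1000, 995)
```

The same consistency check can be applied across time instead of across sources by comparing today's count with yesterday's.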

The best observability tools go beyond these efficiencies to automate more of your security, governance, and operations practices. Plus, they offer affordable storage solutions so your business can continue to scale as data volumes grow. When your data volumes grow month over month, it’s critical to examine how well an observability tool fits your company’s existing needs. To select the right data observability tool, start by reviewing your current IT architecture and finding a tool that integrates with each of your data sources. Look for tools that monitor your data at rest in its current source, without the need to extract it, while also monitoring your data in motion through its entire lifecycle. From there, consider tools that incorporate embedded AIOps (artificial intelligence for IT operations) and intelligence alongside data visualization and analytics. This allows your observability tool to support business goals as effectively as IT needs.

Your organization handles huge volumes of valuable data every day. But without the right tools, managing that data can consume excessive time and resources. Growing data volumes make it more important than ever for companies to find a solution that designs and automates end-to-end data management for analytics, compliance, and security needs. Acceldata's data observability solution makes it possible for data teams to build the right data products.

Contact us to learn more about how data observability can support your organization.

Photo by Thanos Pal on Unsplash
