Optimizing a cloud data environment of any size is a never-ending challenge for data teams. Cloud services provide countless benefits, but they are expensive and can be complex; that is simply a fact of operating a modern data environment. They also change constantly, and data leaders cannot afford the time it would take to manually analyze all of their data operations and cloud spend.

A cloud data platform undeniably delivers many advantages to enterprises, but those advantages come with considerable operational and cost complexity. Consider cloud sticker shock: ZDNet reported that 82% of organizations running cloud infrastructure workloads have incurred unnecessary expenses.

Gartner also predicts that 60% of infrastructure and operations leaders will face public cloud cost overruns. In enterprise data operations, unexpected and unnecessary data-related expenses often stem from the operational complexity of the modern data stack. A minor miscalculation or operational oversight can quickly escalate into millions of dollars in avoidable expenses. Add to this the critical need to establish and maintain reliability across all data pipelines and sources. With reliable data, operations run smoothly and issues are minimized; without it, data teams may end up spending more time putting out fires than building an effective data platform.

Data leaders are turning to data observability to gain deep insight into the operations and costs of their cloud environments. The premise of data observability is that it reveals intelligence about the state, activity, and potential of data, and enables data teams to rapidly remediate the issues it uncovers. Because spending is directly tied to all this data activity, a way to identify spend-related issues is now a requirement.

Reliability issues, downtime, unnecessary expenses, hidden costs, scaling, and utilization can all be expressed in numbers. Viewed through the forensic lens that data observability provides, those numbers gain context and meaning that help data leaders reduce fragility in their data. That equips data teams to move from “Hmmm, seems like we’re paying too much” to “We can save XYZ% by reallocating cloud expenses accurately across multiple departments, eliminating services we’re not using, and cleaning up old repositories that aren’t needed.”

How Data Observability Strengthens Cloud Data Environments

Regardless of an enterprise’s size, its data pipelines tend to become increasingly fragile as its data operations scale. That’s no secret to data engineers, but the degree of that fragility, the location of gaps and issues, and their impact mostly remain hidden. Without data observability, data teams can’t anticipate and prevent pipeline issues. As a result, enterprises may experience unforeseen periods of data downtime, which can lead to customer attrition and missed revenue opportunities. By implementing data observability, organizations can connect their entire data infrastructure and proactively identify and prevent data downtime.

The philosopher and former options trader Nassim Nicholas Taleb describes antifragility as a state in which systems, products, people, and just about every other type of entity withstand stressors because they can adapt, remediate, and become stronger as a result. (His book on the subject, Antifragile, is a fascinating, wide-ranging look at how fragility affects systems and at what it takes to make them stronger.) This is a fitting description of what data observability provides to enterprises that need massive budgets to operate their data infrastructures but want to keep improving by spending wisely, without any adverse impact on their data outcomes.

The antifragility that data observability provides comes from having a comprehensive view of all data systems throughout the data lifecycle. But data observability isn’t simply about showing more or digging deeper; it also involves a great deal of orchestration, including:

- Correlating errors with events across multiple data pipelines

- Identifying data-related issues and analyzing their underlying causes

- Enhancing data quality, streamlining data pipelines, and minimizing infrastructure expenses as operations expand

- Obtaining deeper insight into the robustness and dependability of data systems, beyond just aggregate data
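To make the first item above more concrete, here is a minimal Python sketch of one simple way to correlate errors with events across pipelines: for each pipeline error, collect the events from any pipeline that occurred within a fixed lookback window before it. The `PipelineEvent` record, the `correlate_errors` helper, and the 15-minute default window are all hypothetical choices for illustration; this is not Acceldata’s method or API.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class PipelineEvent:
    # Hypothetical record: which pipeline emitted it, when, and what happened.
    pipeline: str
    timestamp: datetime
    description: str


def correlate_errors(
    errors: list[PipelineEvent],
    events: list[PipelineEvent],
    window: timedelta = timedelta(minutes=15),
) -> dict[PipelineEvent, list[PipelineEvent]]:
    """Map each error to the events (from any pipeline) in the window before it, inclusive."""
    # Sort events once so each lookup is a binary search rather than a full scan.
    ordered = sorted(events, key=lambda e: e.timestamp)
    times = [e.timestamp for e in ordered]
    result = {}
    for err in errors:
        lo = bisect_left(times, err.timestamp - window)
        hi = bisect_right(times, err.timestamp)
        result[err] = ordered[lo:hi]
    return result


# Usage: a schema change in one pipeline precedes a data error in another.
t0 = datetime(2024, 1, 1, 9, 0)
events = [
    PipelineEvent("ingest", t0, "schema change in source table"),
    PipelineEvent("billing", t0 + timedelta(hours=2), "cluster resized"),
]
errors = [PipelineEvent("reporting", t0 + timedelta(minutes=10), "null values in revenue column")]
for err, candidates in correlate_errors(errors, events).items():
    print(err.pipeline, "->", [c.description for c in candidates])
# reporting -> ['schema change in source table']
```

Time-window proximity is only a heuristic: an event that shortly precedes an error is a candidate cause to investigate, not a confirmed root cause.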

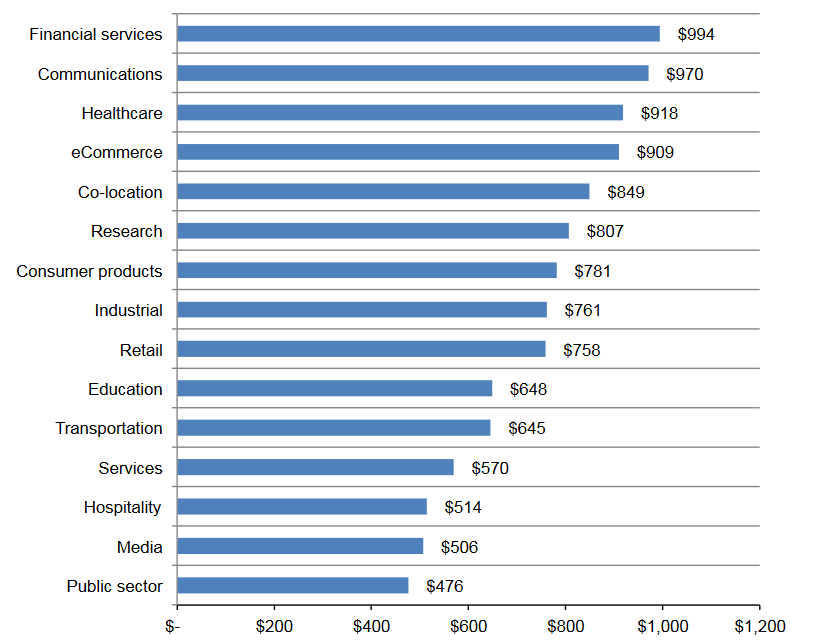

At Acceldata, we’re addressing these and other issues with advanced AI/ML capabilities that can predict, and thereby help prevent, data outages. Preventing data downtime alone can have a large positive impact on an organization’s bottom line, because downtime results in both (a) data teams having to work extensively to fix the problem and (b) lost revenue opportunities due to customer churn. According to the Ponemon Institute, the average cost of a data downtime outage for enterprises is nearly $750,000. In certain industries, such as financial services, communications, and healthcare, the cost can approach $1 million per outage.

Even one outage can cost your enterprise dearly. And it’s not uncommon for enterprises to face at least one or two outages a year as they scale.
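As a rough, back-of-the-envelope illustration using only the Ponemon averages cited above, expected annual downtime exposure is the number of outages per year, n, multiplied by the average cost per outage, c_outage. This is a simplifying assumption: it ignores variation in outage severity and treats “one or two outages a year” as the full range.

$$
\begin{aligned}
C_{\text{annual}} &= n \times c_{\text{outage}} \\
\text{Typical enterprise: } C_{\text{annual}} &\approx (1 \text{ to } 2) \times \$750{,}000 = \$0.75\text{M to } \$1.5\text{M} \\
\text{Financial services, communications, healthcare: } C_{\text{annual}} &\approx (1 \text{ to } 2) \times \$1{,}000{,}000 = \$1\text{M to } \$2\text{M}
\end{aligned}
$$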

Operational Excellence and Cloud Cost Savings

Enterprises often encounter unanticipated issues because of the complexity of their data operations, and errors can swiftly spiral into avoidable expenses reaching millions of dollars. Adopting data observability can help mitigate such problems, whether by addressing data downtime or by reducing software and hardware costs. For an annual cost roughly equivalent to that of one full-time employee, data observability can return more than 80 times its cost to your enterprise.

Managed data infrastructure offerings from proprietary providers, such as Oracle Database, Azure SQL, and Amazon Relational Database Service (RDS), can deliver significant cost savings by eliminating manual upkeep and maintenance while providing scalability and agility. Those savings may not hold, however, for large enterprises that already serve hundreds of thousands of users and need to scale further. In industries like financial services, healthcare, and communications, enterprises can face several million dollars in annual data infrastructure costs.

Data observability offers a solution by helping enterprises switch to open-source technologies and reduce their software and solution costs. The cost of proprietary managed solutions is significant enough that VC firms like Andreessen Horowitz have made a case for cloud repatriation, citing potential savings of 30% to 50% of total cloud costs. The opacity of proprietary managed software costs compounds the problem: cloud infrastructure bills are based on usage factors that aren’t easy to understand or predict.

Fortifying Cloud Environments & Reducing Cloud Costs

Acceldata's data observability platform helps enterprises save money by avoiding unnecessary software license and solution costs. Companies such as PubMatic and PhonePe have achieved substantial savings on their software licensing costs with Acceldata. PhonePe alone estimates savings of at least $5 million in annual software licensing costs thanks to the spend-related issues it uncovers with the Acceldata platform.

Request a demo of the Acceldata data observability platform to see how we help clients reduce operational complexity and rein in the costs of their cloud environments.

Photo by Jason Weingardt on Unsplash
