Observability is a hot topic for anyone in IT and devops tasked with overseeing the performance and health of enterprise systems and applications.

You might think that Data Observability is simply a subset of Observability, focused on monitoring and managing databases and data warehouses.

You would most certainly be wrong. Performing an objective, side-by-side comparison of data observability vs monitoring tools shows why.

Observability has a long history going back to the 1960s “space race” era, while Data Observability has become critically important in just the past several years.

Observability and Data Observability tools help very different people within enterprise IT and promise different outcomes. Specifically, Data Observability helps Data Engineering teams.

While they share methodologies, they deliver on different goals.

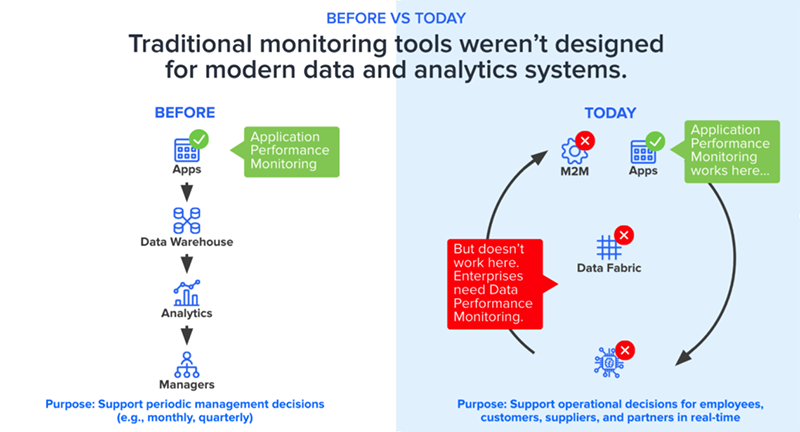

In particular, observability tools such as Application Performance Management (APM) only scratch the surface of what is happening in the data realm.

As a result, they cannot match the visibility, control, and optimization capabilities provided by true Data Observability tools when it comes to supporting today’s mission-critical, real-time analytics and AI applications.

Let’s dive deeper into true data observability vs observability and why the differences exist.

History

If you were an IT manager in the Seinfeld era, you may remember observability discussed in the context of the IT Operations Management (ITOM) tools that were emerging in that timeframe. ITOM vendors were reacting to two major 90s trends:

- The shift from mainframe to client-server computing

- The rise of the Internet

ITOM tools such as IBM Tivoli, HP OpenView, CA Unicenter, Microsoft System Center, and BMC Patrol were a huge boon, providing the ability to monitor, manage, configure, and secure data centers.

But ACTUALLY (and apologies for being that guy), observability goes back further.

The term was coined by Hungarian-American scientist Rudolf Kálmán, who is best known for inventing the Kalman filter, a signal-filtering algorithm that NASA used for navigation on its Apollo missions and Space Shuttles.

Because he’s just that awesome, Kálmán also wrote a seminal paper giving a formal definition of observability: how well the internal states of a system, whether electronic machine, robot, or computer, can be inferred from its external outputs (such as sensor data).
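
For readers who want the math, the usual textbook statement for a linear time-invariant system looks roughly like the following. This is a sketch of the standard rank condition, not a quotation from Kálmán's paper. The symbols are notation assumed here: x holds the n internal states, u the inputs, y the measured outputs, and A, B, C, D are constant matrices.

```latex
\dot{x}(t) = A\,x(t) + B\,u(t), \qquad y(t) = C\,x(t) + D\,u(t)

\mathcal{O} =
\begin{bmatrix}
C \\ CA \\ CA^{2} \\ \vdots \\ CA^{n-1}
\end{bmatrix},
\qquad
\text{observable} \iff \operatorname{rank}(\mathcal{O}) = n
```

In plain terms: if the stacked matrix has full rank, every internal state leaves a distinguishable trace in the outputs, so the states can be reconstructed from what you are able to measure.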

Kálmán’s definition became a cornerstone of modern control theory, which underpinned the IT field of systems management.

Systems management has evolved with every era, from mainframe system monitoring tools in the 1960s and 1970s, to ITOM client-server tools in the 80s/90s, to APM tools that arose in the 90s/00s with web applications.

The latest generation of APM tools (think Dynatrace, AppDynamics, and New Relic) arose with cloud computing in the last decade.

These modern APM tools claim to offer end-to-end observability by throwing around terms such as big data, machine learning, etc.

However, APM stands for application performance management for a good reason. APM tools have an application-centric view rooted in technology almost 20 years old in some cases.

Broadly speaking, Data Observability is a continuation of the observability tradition in IT, following the same basic methodology.

However, the devil is in the details, and two details matter most. First, Data Observability tools arose in response to businesses in the past half-decade striving to become data-driven digital enterprises. Digital businesses are trying to extract more information from the data they collect and process at any given time. And they want to do so by empowering as many employees as possible, not just BI analysts or data scientists, to consume, or benefit from, that data. The cutting edge of modern data systems includes real-time analytics, citizen development and self-service analytics, machine learning, and AI.

Second, to serve these needs, Data Observability tools focus on visibility, control, and optimization of distributed, diverse data infrastructures. These include cloud-hosted open-source data clusters and real-time data streams, as well as legacy on-premises database clusters and data warehouses.

Other observability tools, including traditional APM, predate this shift to the data-driven enterprise. They are fundamentally ill-equipped to support the complexity of distributed data infrastructure, and they cannot help data engineers manage and optimize their data pipelines or meet Service Level Agreements (SLAs).

Focus

APM observability remains focused on providing telemetry on monolithic and microservice applications, which are rarely both data-intensive and distributed. The sweet spot for APM tools is providing alerts about issues with a web site, tracking middleware performance, dealing with a request-response system, and maybe a few microservices.

What creates trouble for APM observability tools is dealing with the underlying abstractions of complex data systems. They fall flat if you ask them to track the directed acyclic graph (DAG) of a compute engine or monitor a complex streaming system like Apache Kafka. And they don’t delve into the multiple layers of the modern data infrastructure, which include the engine/compute layer, the data layer (where reliability and quality are determined), and the business layer (for data pipelines and costing).

True Data Observability tools can and do support managing, monitoring, and remediating, often automatically, all of the different layers of complex, distributed data systems. In particular, Data Observability provides holistic insight into, and control over, your business’s data pipelines from a single pane of glass.

Data pipelines are the new atomic unit for success for Data Observability. When you measure and manage data pipelines, you gain control over data in motion AND data at rest, allowing you to track data performance end-to-end, and fix and prevent bottlenecks, overprovisioning, and wasted IT labor — all while meeting SLAs.
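
To make "measuring a pipeline end-to-end" concrete, here is a minimal Python sketch. It is not how any particular product works; the stage names, durations, and the `check_sla` helper are all hypothetical. It treats a pipeline as a directed acyclic graph of stages, computes the end-to-end latency along the slowest path, names the single slowest stage, and compares the result to an SLA.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: stage -> (duration in seconds, upstream stages).
PIPELINE = {
    "ingest":    (120, []),
    "validate":  (45,  ["ingest"]),
    "enrich":    (300, ["ingest"]),
    "aggregate": (90,  ["validate", "enrich"]),
    "publish":   (30,  ["aggregate"]),
}


def end_to_end_latency(pipeline):
    """Return (latency along the slowest path, single slowest stage)."""
    # TopologicalSorter expects a mapping of node -> its predecessors.
    graph = {stage: set(deps) for stage, (_, deps) in pipeline.items()}
    finish = {}
    for stage in TopologicalSorter(graph).static_order():
        duration, deps = pipeline[stage]
        # A stage can only start once all of its upstream stages finish.
        start = max((finish[d] for d in deps), default=0)
        finish[stage] = start + duration
    slowest_stage = max(pipeline, key=lambda s: pipeline[s][0])
    return max(finish.values()), slowest_stage


def check_sla(pipeline, sla_seconds):
    """Compare the pipeline's end-to-end latency against an SLA target."""
    latency, slowest_stage = end_to_end_latency(pipeline)
    return {
        "latency": latency,
        "bottleneck": slowest_stage,
        "sla_met": latency <= sla_seconds,
    }


print(check_sla(PIPELINE, sla_seconds=600))
```

With the made-up numbers above, the slow path runs through the "enrich" stage, so that is where tuning effort would pay off first. A real tool would collect these timings continuously rather than from a hard-coded table.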

For sure, APM observability vendors claim to be increasing the telemetry data that they gather. But today’s distributed infrastructure is so massive that APM tools still only scratch the surface of your data infrastructure.

Not even so-called full-stack observability (FSO) tools from APM vendors dive deeply enough into the data realm to empower data engineers and data ops teams. And that’s key.

Users

Twenty years ago, IT was all about PCs and networks. Today’s IT teams are dominated by two fast-growing camps: those focused on supporting applications, and those focused on supporting data.

APM observability serves the application camp, which includes engineers and teams in the areas of devops, site reliability, cloud infrastructure, and closely related IT roles.

Data observability tools focus first on the needs of data engineers, data architects, chief data officers, as well as data scientists and analytics officers. This is a smaller, newer but faster-growing group. Data is becoming more mission-critical, and more businesses are digitizing. As data engineering teams grow, they need Data Observability tools to ensure they can meet the demanding SLAs of their business peers. That’s because...

Impact

Conventional observability tools lack the capabilities to help data engineers efficiently manage today’s embedded analytics, especially mission-critical ones. Only true Data Observability tools can.

Don’t be lured in by one-dimensional point solutions. They either focus on one task, such as data monitoring, or work for only one platform, such as a single cloud-native data warehouse (DWH). For maximum control and efficiency, data engineers need a full-fledged, multi-platform Data Observability solution that provides a holistic, single-pane-of-glass view of their entire data ecosystem.

That solution should provide strong management, predictive analysis of data pipeline issues, and automation capabilities needed for efficient performance management — capabilities that application-centric, data monitoring, and one-dimensional point solutions all lack.

Data Observability vs Monitoring: See the Difference for Yourself

Acceldata has created the only true Data Observability solution available today. It includes three key components (Pulse, Torch, and Flow) that together provide the most comprehensive, end-to-end Data Observability platform on the market. Acceldata’s Data Observability platform provides visibility into the compute, data, and pipeline layers to help enterprises observe, scale, and optimize their data systems.

Discover more about Acceldata at www.acceldata.io.
