We all know there is bad data lurking somewhere in our organizations and our data supply chains. The key is to make sure we find and fix the bad data before its negative impacts result in bad decision-making and have a detrimental effect on revenue, customer relationships, and business processes.

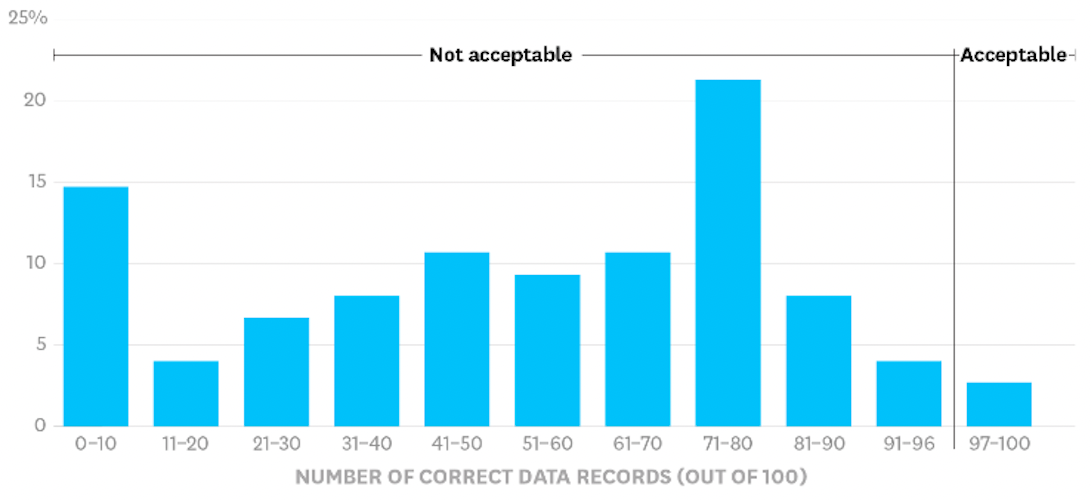

As you can see in the chart below from the Harvard Business Review, only 3% of enterprise data is considered to be acceptable (quality score of 97+) and 15% to be mostly accurate (quality score of 80+). The sad reality is that data teams will inevitably encounter bad data at some point.

Common Types of Bad Data

Companies can possess various types of bad data – inaccurate, incomplete, or unreliable information that can negatively impact decision-making and business operations. Some common types of bad data include:

- Incomplete Data: Data that lacks essential information, fields, or attributes. This can skew analysis and lead to incorrect conclusions.

- Inaccurate Data: Data that contains errors, inaccuracies, or inconsistencies. This can result from human error during data entry, technical glitches, or outdated information.

- Duplicate Data: Multiple instances of the same data entry in a dataset. This leads to overcounting, redundancy, and skewed analytics.

- Outdated Data: Data that is no longer relevant or reflective of the current situation. Relying on outdated data leads to uninformed decisions.

- Non-Standardized Data: Data that is inconsistent in format, structure, or units of measurement. This can make it challenging to perform accurate analyses or comparisons.

- Biased Data: Data that contains inherent biases due to the sampling methods used, the sources of data, or the demographics represented. This can result in skewed insights and unfair decision-making.

- Missing Data: Data points that are simply absent from the dataset. This can create gaps in analysis and incomplete conclusions.

- Inaccessible Data: Data that is stored in a way that is difficult to access, making it challenging to retrieve and use effectively.

Addressing and mitigating these types of bad data is essential for maintaining data quality, making informed decisions, and ensuring the accuracy of business operations and analyses.
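Several of these categories can be checked mechanically. Here is a minimal sketch, assuming a hypothetical list of customer records; the field names, the allowed country codes, and the one-year freshness threshold are illustrative choices, not part of any particular product.

```python
from datetime import date

# Hypothetical customer records; field names are illustrative only.
records = [
    {"id": 1, "email": "a@example.com", "country": "US", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None, "country": "US", "updated": date(2024, 4, 1)},
    {"id": 1, "email": "a@example.com", "country": "US", "updated": date(2024, 5, 1)},
    {"id": 3, "email": "c@example.com", "country": "usa", "updated": date(2019, 1, 1)},
]

REQUIRED_FIELDS = ("id", "email", "country", "updated")
VALID_COUNTRIES = {"US", "CA", "GB"}  # assumed standard codes
MAX_AGE_DAYS = 365                    # assumed freshness threshold


def classify(rows, today):
    """Map each issue type to the indexes of the rows that exhibit it."""
    issues = {"incomplete": [], "duplicate": [], "outdated": [], "non_standard": []}
    seen = set()
    for i, row in enumerate(rows):
        # Incomplete / missing: a required field has no value.
        if any(row.get(field) is None for field in REQUIRED_FIELDS):
            issues["incomplete"].append(i)
        # Duplicate: the same combination of values was already seen.
        key = tuple(row.get(field) for field in REQUIRED_FIELDS)
        if key in seen:
            issues["duplicate"].append(i)
        seen.add(key)
        # Outdated: the record has not been refreshed recently enough.
        updated = row.get("updated")
        if updated is not None and (today - updated).days > MAX_AGE_DAYS:
            issues["outdated"].append(i)
        # Non-standardized: the value is not in the agreed format.
        country = row.get("country")
        if country is not None and country not in VALID_COUNTRIES:
            issues["non_standard"].append(i)
    return issues


report = classify(records, today=date(2024, 6, 1))
print(report)
```

Biased and inaccurate data are harder to catch with rules like these, since they usually require comparison against a trusted reference.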

The Impact of Bad Data on Engineering Costs

There is a principle in software development often referred to as the 1 x 10 x 100 rule (sometimes dubbed the 10 x 10 x 10 rule). It describes how the cost of fixing a problem grows the later it is caught. Resolving a problem during the development phase costs $1. That cost rises to $10 if the problem is found and fixed during the QA/staging phase, and to $100 if it is found and fixed after the software has been deployed into production.

The same rule can be extended to data pipelines and data supply chains. Here, fixing an issue in the landing zone costs $1, while fixing it in the transformation zone costs $10. If the problem persists until the consumption zone, the cost to detect and fix it balloons to $100.
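To make the multipliers concrete, here is a small sketch that applies them to a batch of incidents. The incident counts are hypothetical, and the costs are relative units rather than real dollar figures.

```python
# Relative cost of fixing one incident in each pipeline zone (1 x 10 x 100 rule).
ZONE_COST = {"landing": 1, "transformation": 10, "consumption": 100}


def remediation_cost(incidents_by_zone):
    """Total relative cost, given how many incidents were caught in each zone."""
    return sum(ZONE_COST[zone] * count for zone, count in incidents_by_zone.items())


# The same ten hypothetical incidents, caught late versus caught early.
caught_late = remediation_cost({"landing": 0, "transformation": 2, "consumption": 8})
caught_early = remediation_cost({"landing": 8, "transformation": 2, "consumption": 0})

print(caught_late, caught_early)  # 820 28
```

Under these assumed counts, catching most incidents in the landing zone cuts the relative cost from 820 to 28.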

A recent survey found that data engineers spend approximately one week per month, roughly a quarter of their time, on data quality incidents. At an average annual salary of $140,000, this translates to a direct cost of $35,000 per engineer per year for fixing bad data. An equivalent sum is lost to missed opportunities, since that time could have been spent on other work.
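The arithmetic is simple enough to adapt to your own team. This sketch uses the salary and time share quoted above; the team size of ten in the second call is a hypothetical input.

```python
def annual_bad_data_cost(salary, share_of_time, engineers=1):
    """Return (direct, opportunity, total) yearly cost of data quality incidents."""
    direct = salary * share_of_time * engineers
    # Per the survey figure above, opportunity cost is taken as equal to direct cost.
    opportunity = direct
    return direct, opportunity, direct + opportunity


direct, opportunity, total = annual_bad_data_cost(140_000, 0.25)
print(direct, total)  # 35000.0 70000.0

# Hypothetical team of ten engineers.
team_direct, _, team_total = annual_bad_data_cost(140_000, 0.25, engineers=10)
print(team_direct, team_total)  # 350000.0 700000.0
```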

How Bad Data Impacts Business Costs

Bad data can have significant business impacts on a company in a variety of ways, including:

- Poor Decisions and Actions: Inaccurate, incomplete, or outdated data results in poor decisions in strategic planning, marketing campaigns, resource allocation, and much more, leading to wasted costs.

- Missed Sales Opportunities: Inaccurate customer data could lead to missed sales and wasted marketing costs, while poor market data could lead to missed market opportunities.

- Reputation Damage: Bad data that leads to incorrect communications, inaccurate product information, or mishandling of customer data can create negative perceptions and revenue erosion.

- Wasted Operational Costs: Inaccurate data causes operational problems such as overstocking, stockouts, or supply chain disruption, which lead to wasted costs or missed sales opportunities.

- Regulatory Compliance Issues: Bad data within regulatory reporting and compliance processes can lead to legal and financial repercussions, including fines and lawsuits.

- Customer Experience: Poor customer data can negatively impact customer experience, frustrating customers and leading to lost future customer value.

- Lack of Innovation: Innovation often relies on accurate data analysis to identify emerging trends and customer preferences. If the data is bad, the company may struggle to identify these insights, leading to a lack of innovation and falling behind competitors.

- Long-Term Financial Impact: The cumulative effect of bad data over time can be substantial. From missed opportunities to decreased customer loyalty, the long-term financial impact can be difficult to quantify but is nonetheless significant.

Business Processes that Are Impacted by Bad Data

Bad data can have a significant impact on various business processes. Here are some key business processes that can be negatively affected by bad data:

- Strategic Planning: Bad data in analytical models and reports will lead to poor strategic planning and resource allocation.

- Customer Relationship Management (CRM): Bad data in CRM can result in incorrect customer profiles, inaccurate contact information, and missed opportunities for engagement that lead to lost sales and revenue.

- Supply Chain Management: Bad data can disrupt the supply chain by causing errors in inventory levels, procurement forecasts, and demand planning, driving up operational costs and potentially leading to lost revenue.

- Financial Reporting: Inaccurate financial data can lead to incorrect financial statements and misinformed investment decisions, hindering the financial operations of the organization.

- Marketing and Sales: Inaccurate data results in poor customer targeting and ineffective marketing campaigns, which lead to lost sales and wasted marketing costs.

- Quality Control and Manufacturing: Bad data in quality control processes results in faulty product specifications, incorrect measurements, and defective products, which can lead to recalls, customer dissatisfaction, and higher costs.

- Compliance and Risk Management: Bad data can lead to non-compliance with regulations and incorrect risk assessments, resulting in fines and lawsuits.

- Logistics and Transportation: Bad data causes errors in shipping addresses, incorrect routing, and delivery delays, increasing logistics costs and frustrating customers.

- Healthcare and Life Sciences: In sectors like healthcare, bad data can have serious consequences, including misdiagnoses, incorrect patient information, and compromised patient care that lead to higher costs and potential lawsuits.

- Energy and Utilities: Bad data in energy and utility sectors results in inefficient energy consumption, inaccurate billing, and disruptions in service delivery that drive up costs.

Mitigating the Impact & Cost of Bad Data

A comprehensive data reliability solution (which includes data quality) identifies bad data and data pipeline issues, alerts teams to data incidents, and quickly remedies data problems. This improves the reliability and overall health of your data, builds data trust, and leads to better business decisions and outcomes.

The Acceldata Data Observability Platform has a comprehensive data reliability solution that maximizes data quality and eliminates data outages and incidents. With Acceldata, data teams get detailed insights and tools that identify, remediate, and prevent data problems.

Acceldata also lets you “shift left” your data reliability, detecting bad data earlier in the data pipelines that make up your data supply chain. You can detect, isolate, and quarantine bad data within your pipelines, get alerts when problems occur earlier in the process, and insert circuit breakers to stop pipelines when bad data is detected. This prevents bad data from reaching data consumers, so they are always working with high-quality data they can trust to make agile, accurate decisions and drive better business outcomes.
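The general idea of quarantining bad rows and tripping a circuit breaker can be sketched in a few lines. This is a generic illustration, not Acceldata's API: the `landing_zone_check` function, the validation rule, and the 20% threshold are all assumptions made for the example.

```python
class BadDataError(Exception):
    """Raised to stop the pipeline when too much bad data is detected."""


def is_valid(row):
    # Hypothetical rule: "amount" must be present and non-negative.
    return row.get("amount") is not None and row["amount"] >= 0


def landing_zone_check(rows, max_bad_ratio=0.2):
    """Split rows into good and quarantined; trip the breaker if too many are bad."""
    good, quarantined = [], []
    for row in rows:
        (good if is_valid(row) else quarantined).append(row)
    bad_ratio = len(quarantined) / len(rows) if rows else 0.0
    if bad_ratio > max_bad_ratio:
        # Circuit breaker: halt before bad data reaches downstream zones.
        raise BadDataError(f"{len(quarantined)} of {len(rows)} rows failed validation")
    return good, quarantined


batch = [{"amount": 10}, {"amount": None}, {"amount": 5}, {"amount": 7}, {"amount": 3}]
good, quarantined = landing_zone_check(batch)
print(len(good), len(quarantined))  # 4 1
```

Here a single bad row is set aside and the remaining rows continue, while a batch with more than 20% invalid rows stops the pipeline in the landing zone, where the fix is cheapest.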

See how you can improve your data reliability and reduce the cost of bad data by scheduling a personalized demo.

Photo by CHUTTERSNAP on Unsplash
