If data is the lifeblood of enterprises, then poor data is the illness that costs enterprises, on average, $15 million every year.

Many enterprises recognize the need to improve data quality, but most don’t acknowledge that improving data quality isn’t a one-time activity. Nor is the scope of the issue limited to data teams alone.

To successfully use data for better business outcomes, enterprises need to bake data quality best practices into their operations. That requires a data observability solution that lets data teams understand their data at a granular level, optimize their data supply chains, scale their data operations, and ultimately deliver reliable data continuously.

Data observability can help data teams align data operations with key business outcomes. It provides a single, unified view into data, processing, and pipelines at any time and at any point in the data lifecycle. It can automatically detect data drift and anomalies in large sets of unstructured data, and it provides clarity on the state of an enterprise’s data and the systems that transform it.

Enterprise data teams need to develop and adhere to processes that will enable them to optimize their data operations. They should initiate this effort with the following best practices.

Align Data Operations to Meet Business Needs

Organized, thoughtful enterprise data and business teams seek to align technology operations with business needs, but setting up the processes that ensure reliable outcomes typically means managing a variety of disparate tools. Without the right tools, the task is impossible: manually measuring and tracking data metrics costs data teams a lot of time and effort. As a result, teams often don’t track or review whether data operations actually help meet business needs. Data observability can reduce this drudgery.

Data observability helps data teams improve performance and lower costs

Data observability can help data teams monitor workloads as well as identify constrained and spare resources. AI-driven data observability features can also predict future capacity requirements based on available capacity, buffer, and expected growth in workload.
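
To make the capacity inputs above concrete, here is a minimal sketch of how available capacity, a reserved buffer, and expected workload growth could be combined into a forecast. It assumes simple compound monthly growth rather than any AI model, and it is not Acceldata's method; the function name and all parameters are hypothetical.

```python
import math


def months_until_capacity_exhausted(total_tb, used_tb, buffer_fraction, monthly_growth_rate):
    """Estimate how many whole months remain before usage exceeds usable capacity.

    Assumption: usage compounds at a fixed monthly rate, and a fixed fraction
    of total capacity is held back as a safety buffer.
    """
    if used_tb <= 0 or monthly_growth_rate <= 0:
        raise ValueError("used_tb and monthly_growth_rate must be positive")
    if not 0 <= buffer_fraction < 1:
        raise ValueError("buffer_fraction must be in [0, 1)")

    usable_tb = total_tb * (1 - buffer_fraction)
    if used_tb >= usable_tb:
        return 0  # already inside the buffer

    # Solve used * (1 + g)^n <= usable for the largest whole n.
    return math.floor(math.log(usable_tb / used_tb) / math.log(1 + monthly_growth_rate))


# A 1,000 TB cluster with 400 TB used, a 20% buffer, and 10% monthly growth.
print(months_until_capacity_exhausted(1000, 400, 0.20, 0.10))
```

A real forecast would likely account for seasonality and uneven growth across workloads; the fixed-rate assumption is only there to show how the three inputs interact.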

These aren’t futuristic, theoretical predictions. Today, enterprises of all types already use a multi-layered data observability solution like Acceldata. As a result, they’re able to achieve higher turnover and reduce infrastructure costs, amounting to over a 1,000x return on their data observability investment.

PhonePe, a Walmart subsidiary, uses Acceldata to scale its data infrastructure by 2,000% and save over $5M every year.

Acceldata can deliver equally effective results to an established ad-tech firm like PubMatic, which serves over 200 billion daily ad impressions and receives over 1 trillion ad bids every day. With Acceldata, PubMatic saves millions of dollars every year by optimizing their HDFS performance, consolidating Kafka clusters, and significantly reducing their cost per ad impression (their most critical business outcome).

Data Observability Across Data Pipelines and the Entire Data Lifecycle

As business operations become more customized, sophisticated, and nuanced, data teams need to create complex data pipelines that integrate solutions with various functionality. This results in more potential points of failure.

Today, data pipelines need to ingest data from structured, semi-structured, and unstructured databases. In addition, they need to use online repositories, third-party sources, or a combination of the two. They also use a combination of data warehouses, lakehouses, and query services such as BigQuery, Databricks, HBase, Hive, or Snowflake to store and make sense of the data. Furthermore, they may use Amazon S3, HDFS, or Google Storage to store the data and use applications like Tableau or Presto to present the data.

There's no doubt that these technologies help data teams quickly stitch together complex data pipelines. However, they also result in fragmented and partial views of data pipelines. This, in turn, may lead to unexpected data and behavior changes. And as any data engineer or scientist can attest to, this adds complexity to data operations, especially if it occurs in a mission-critical pipeline during production.

Data observability offers a unified view of the entire data pipeline across technologies

More than ever before, data teams need a single, unified view of their entire data pipeline across all technologies. They can’t improve data quality unless they go beyond fragmented views and get a holistic view of how data is transformed across the entire data lifecycle.

A data observability solution like Acceldata Torch can predict, prevent, and resolve unexpected data downtime or integrity problems that can arise from fragmented data pipelines. It automatically monitors data centrally to evaluate data fidelity. It ensures data quality is retained, even after data gets transformed multiple times across several different technologies. It can track data lineage to make sure the data is trustworthy so data teams needn’t pull all-nighters resolving urgent data escalations.

True Digital, one of Thailand’s biggest communications companies, uses Acceldata to solve significant performance and scalability problems. For instance, they weren’t able to process nearly 50% of data beyond the ingestion stage. With Acceldata, they were able to get a unified view of their entire data pipeline and resolve their performance problems. They eliminated all unplanned data outages and SEV1 issues. In addition to this, they were able to scale their data infrastructure and at the same time save more than $3M every year.

Use AI to Detect Data Errors, Reconcile Data, and Detect Data Drift

With the increasing volume, velocity, and variety of incoming data, relying exclusively on manual interventions to improve data quality is akin to looking for a needle in an ever-expanding haystack. A top-end data observability solution can leverage AI to automatically flag errors, unexpected data behaviors, and data drift. This narrows down the problem scope and helps data teams to effectively resolve data problems.
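
As an illustration of what automatically flagging data drift can look like in its simplest form, the sketch below compares the mean of a new batch against a baseline sample using a z-score. This is a plain statistical check swapped in for illustration, not an AI model and not Acceldata's implementation; the function names and the threshold of 3.0 are assumptions.

```python
import statistics


def mean_shift_zscore(baseline, current):
    """Return the z-score of the current batch mean relative to the baseline."""
    mu = statistics.fmean(baseline)
    sigma = statistics.stdev(baseline)  # sample standard deviation
    if sigma == 0:
        raise ValueError("baseline has no variance; z-score is undefined")
    # Standard error of the mean for a batch of this size.
    standard_error = sigma / len(current) ** 0.5
    return (statistics.fmean(current) - mu) / standard_error


def has_drifted(baseline, current, threshold=3.0):
    """Flag the batch when its mean is more than `threshold` standard errors away."""
    return abs(mean_shift_zscore(baseline, current)) > threshold


baseline = [10, 12, 11, 9, 10, 11, 12, 10, 9, 11]
print(has_drifted(baseline, [10, 11, 10, 12]))  # batch close to the baseline
print(has_drifted(baseline, [15, 16, 14, 15]))  # batch with a clearly shifted mean
```

A check like this narrows the search to the batches and columns that moved, which is the "smaller haystack" effect described above.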

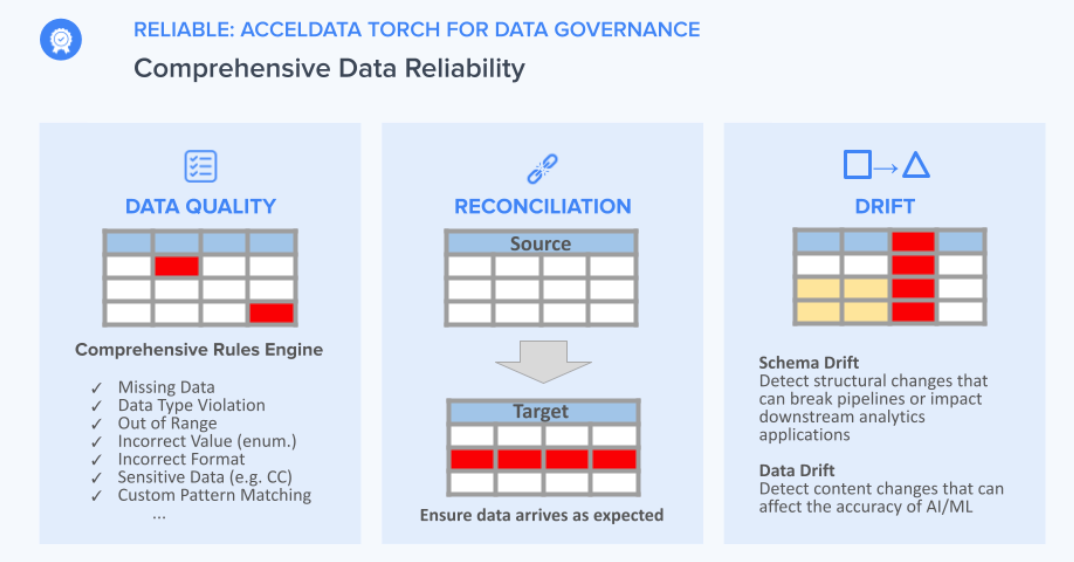

With Acceldata, data teams can leverage AI to create a custom rules-based engine tailored to what their business operations need. This helps them automatically flag missing, incorrect, and inaccurate data records.
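
The idea of a rules-based engine can be sketched in a few lines. The example below is a generic illustration, not Acceldata's engine: the `Rule` class, the sample rules, and the field names (`order_id`, `amount`, `currency`) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[dict], bool]  # returns True when the record passes


# Hypothetical business rules for an order record.
RULES = [
    Rule("order_id present", lambda r: r.get("order_id") is not None),
    Rule(
        "amount is non-negative number",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
    Rule("currency is known", lambda r: r.get("currency") in {"USD", "EUR", "INR"}),
]


def flag_records(records, rules=RULES):
    """Return (index, failed rule names) for every record that fails a rule."""
    flagged = []
    for index, record in enumerate(records):
        failed = [rule.name for rule in rules if not rule.check(record)]
        if failed:
            flagged.append((index, failed))
    return flagged


records = [
    {"order_id": 1, "amount": 10.0, "currency": "USD"},
    {"order_id": None, "amount": -5, "currency": "USD"},
    {"order_id": 3, "amount": "12", "currency": "XYZ"},
]
for index, failed in flag_records(records):
    print(index, failed)
```

Keeping each rule as a named, independent check means a flagged record reports exactly which business expectation it violated.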

The Acceldata Data Observability Platform helps data teams reconcile data records with their sources. It can help data teams analyze the root cause of unexpected behavior changes by comparing application logs, query runtimes, or queue utilization statistics. It can also help detect structural or content changes that can result in either schema drift or data drift, and in turn, avoid broken data pipelines as well as unreliable data analysis. And it can automatically detect anomalies.
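
Two of the checks described above, schema drift detection and reconciliation against a source, reduce to set comparisons in their simplest form. The sketch below is a generic illustration under that assumption; the function names, the `{column: type}` schema representation, and the sample data are hypothetical and do not reflect Acceldata's API.

```python
def schema_drift(expected, observed):
    """Compare two {column: type} mappings and report structural changes."""
    shared = expected.keys() & observed.keys()
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "retyped": sorted(c for c in shared if expected[c] != observed[c]),
    }


def reconcile(source_rows, target_rows, key):
    """Report keys that exist on only one side of a source-to-target load."""
    source_keys = {row[key] for row in source_rows}
    target_keys = {row[key] for row in target_rows}
    return {
        "missing_in_target": sorted(source_keys - target_keys),
        "unexpected_in_target": sorted(target_keys - source_keys),
    }


expected = {"id": "int", "amount": "float", "ts": "timestamp"}
observed = {"id": "int", "amount": "string", "country": "string"}
print(schema_drift(expected, observed))

source = [{"id": 1}, {"id": 2}, {"id": 3}]
target = [{"id": 2}, {"id": 3}, {"id": 4}]
print(reconcile(source, target, key="id"))
```

Running checks like these after each transformation stage is one way to catch a dropped column or a lost record before it reaches downstream analysis.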

How to Optimize Data Quality

Poor data quality is a recurring problem for all data-driven enterprises, irrespective of size or scale. But companies take one of two extreme approaches to solve their data quality problems.

On one extreme, technology companies like Airbnb, LinkedIn, and Uber end up investing several million dollars and years of effort to create their own proprietary data quality management platform.

And on the other extreme, most enterprises today rely only on manual interventions. So they don’t use a platform that can a) automatically address data quality problems at scale, b) offer a unified view of how data gets transformed, and c) detect data drift or anomalies automatically.

Creating your own data quality platform is sub-optimal because most companies can’t or won’t invest millions of dollars and wait two years to reap the results. At the same time, not using a data quality platform that scales with your data needs can be even more disastrous, because those problems will come back to bite you as unreliable data and increased data handling costs.

But there is a better way out. Enterprises looking to improve data quality can integrate a data observability solution, in a few days, at the cost of one full-time employee.

Integrating data observability into your business operations will create the necessary environment and feedback loop needed to improve data quality, at scale, on an ongoing basis. It will also help your enterprise make the most out of all the data quality best practices your data team adopts.

Request a free personalized demo to understand how Acceldata can help your enterprise improve business outcomes by improving data quality.
